How do you scale your backend? [Poll]

If you have stories to share or know what the best practice is, I'd be glad to hear that too!

using cloud services - CosmosDB & Azure serverless functions.

As an indie hacker, imo there isn't enough time to be worrying about load balancing / server deployment topologies - use the cloud services that do this for you, and ship features faster ;)

Prob. a little different once you've reached scale and have the revenue to be worrying about cost optimization.

True! Serverless is one thing I want to look into. Then, Vercel backed by serverless functions sounds like a dream team.

AKA Scale Up vs Scale Out.

I was also going to say serverless provides OOTB scalability and effectively both out & up (just being careful to keep an eye on costs).

If not serverless, it's the politician's answer: it depends. Your question is how, but it comes down to why.

It will depend on why you need to scale to determine what exactly you should scale, and it will depend on your architecture what you can scale.

For example, if you have a monolith app you can scale up and out (provided you have session/state management where you need it), but it'll obviously be less cost-efficient.

If you have implemented a microservices architecture, you will be able to scale (out) the individual services that require scaling rather than the whole app.

If you need heavy computational power and parallel processing is not possible (perhaps due to resources or state) then scale (up) your server accordingly.

For me it's generally been a weak single server with a reasonably efficient tech stack. My own side project runs on a $5/month VPS and has hit the front of HN and never even reached 1% of what it can handle under load testing. If I get traffic over 10% of what it can handle, I'd upgrade hardware. If it's necessary to upgrade hardware to anything close to the limit, I'll scale horizontally. That will never happen with my current project, though.
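To make that rule of thumb concrete, here is a minimal Python sketch. The function name, the `hardware_near_limit` flag, and the requests-per-second units are all assumptions for illustration. Only the 10% threshold and the order of the steps come from the comment above.

```python
def next_step(current_rps, tested_capacity_rps,
              hardware_near_limit=False, upgrade_threshold=0.10):
    """Suggest a scaling action from load-test numbers (hypothetical helper).

    current_rps:          observed peak traffic, requests per second
    tested_capacity_rps:  what the server handled under load testing
    hardware_near_limit:  True if the next hardware upgrade would be close
                          to the biggest machine available (assumed flag)
    """
    if tested_capacity_rps <= 0:
        raise ValueError("tested_capacity_rps must be positive")

    utilisation = current_rps / tested_capacity_rps
    if utilisation <= upgrade_threshold:
        return "stay put"            # plenty of headroom left
    if hardware_near_limit:
        return "scale horizontally"  # vertical upgrades are running out
    return "upgrade hardware"        # simplest move: a bigger box


print(next_step(5, 1000))                             # under 1% of capacity
print(next_step(150, 1000))                           # over the 10% threshold
print(next_step(150, 1000, hardware_near_limit=True))
```

The point of the sketch is that the decision is driven by measured headroom, not by guesses about future traffic.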

FWIW, Stack Overflow scaled to millions of monthly users on a single server running on late 2000s hardware. They talked about it quite a bit in their old podcast.

The real question is, should you even be worrying about scaling now?

Which VPS do you recommend or use?

It doesn't matter much. I use DigitalOcean, but Linode or many other alternatives would also be fine.

That's true, not doing any pre-optimization. Just wanted people's opinion on it :)

I use CapRover on a Linode and will do vertical scaling for the foreseeable future. I personally believe that you should try to keep things as simple as possible. Especially in the beginning. Another important factor for me is local development. I should be able to spin up the whole thing w/o much hassle on my machine.

My professional background is in Cloud Computing (Serverless and some Kubernetes) but on side projects I very much prefer to hit everything with the simple stick. K8S and friends might be great but TBH I've seen them overused way too often (including by myself, in retrospect).

My current stack is Python (Flask or Django) + PostgreSQL. Python because of ML. But everything Node.js, Ruby or PHP related is also fine.

Pick what works best for you and see the technology aspect as a means to an end.

You can still scale things out later on once you hit the jackpot. A lot of companies went down that route. See e.g. https://engineering.shopify.com/blogs/engineering/e-commerce-at-scale-inside-shopifys-tech-stack.

Hey, I used CapRover before but I found it a bit buggy, especially the UI. How's it going for you so far?

Hey, thanks for sharing your experience. I must say that it's pretty stable so far. No UI glitches or anything of that sort. I also looked into alternatives (Dokku comes to mind) but found the simple setup and manageability via a built-in UI appealing.

When did you use CapRover? Do you remember which version it was? I'm running `latest`, which is 1.7.1. What does your environment look like right now?

The good thing is that it's "just" a wrapper around Docker itself. So if you're struggling with something you can hack your way out using your Docker knowledge. Another benefit is that there's little vendor lock-in. Once scaling becomes a real issue you can take your containers and put them on a (Kubernetes) cluster.

Yeah, I think it's a step in the right direction but I had a few problems with it. I'm sure it was a fairly new version, although I can't remember the exact number.

It had an issue where the domain setup step glitched out. You needed to refresh the page for the settings to apply.

But the straw that broke the camel's back was when it crashed while I was setting up a new project and it took the whole server down including other services with it.

That's when I gave up and switched to Render's service.

Interesting 🤔 That's good to know!

I've deployed a couple of apps and haven't run into any issues, so fingers crossed. Is https://render.com what you're referring to? Looks quite compelling.

Yep, that's the one.

Are we in 2005 again?

There are sooooo many cheap cloud services that save you this hassle.

You don't want a single point of failure, so use at least 2 servers (or use a cloud platform/FaaS).

I recommend getting started with a single server, but with a reliable hosting provider. That way you can keep things simple. My current setup is more complicated because I run apps on cheap hosting that occasionally goes down, which requires me to run load balancing with multiple backend instances.

It really depends on the type of site you are running and whether you think your ultimate traffic level or resource requirements (CPU, space, memory, etc.) will exceed the capabilities of a single server.

In years past, I either used one powerful server or two powerful servers, one for web and one for the database, for each of my projects. However that changed once virtual server hosting became more available and viable.

With virtual servers you can create a scalable foundation from the start so you are prepared if/when your traffic increases, especially during traffic spikes from those rare "front page link" type of traffic surges.

It takes more work to develop your software for multi-server use from the start, but it also means you are doing it before you have a lot of traffic which gives you time to solve any issues and tweak it for better performance without irritating a lot of your users.

That said, for most MVP projects one server is all you usually need through the validation phase.

A serverless/FaaS answer would have been nice (2nd one could fit but still quite different)

Firebase should be an option haha

All on AWS Elastic Beanstalk here. A bit of work to set up initially, but the load balancing and fail safes built into their ecosystem are fantastic. I can sleep easy at night.

It's definitely possible to scale up quite a bit with a single server.

Here's a side project where every single request is dynamically generated.

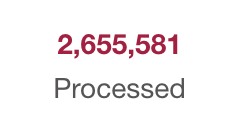

Number of jobs processed in the past 4 days alone:

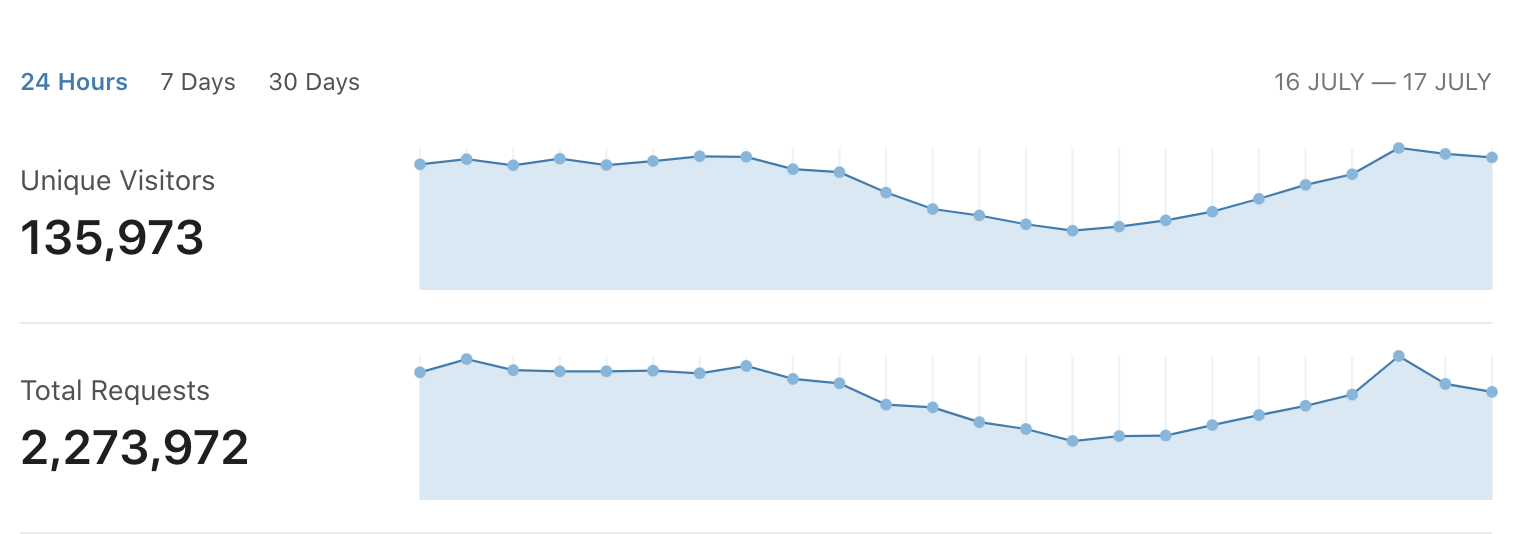

Number of uniques and visitors in the past 24 hours:

Previously, this was hosted at one of the usual popular VPS providers. Cost about $200 USD per month. Moved it to a dedicated server (very beefy, with 32 GB of RAM) for way cheaper -- like $35 per month (~83% cheaper).
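For anyone who wants to check the savings figure, here is the arithmetic as a tiny Python sketch. The two prices are the approximate ones quoted above, and the helper name is made up for illustration.

```python
def percent_saved(old_monthly, new_monthly):
    """Percentage reduction when moving from old_monthly to new_monthly."""
    return (old_monthly - new_monthly) / old_monthly * 100


old_cost, new_cost = 200, 35                 # USD per month, approximate figures
saving = percent_saved(old_cost, new_cost)   # (200 - 35) / 200 * 100
yearly_saving = (old_cost - new_cost) * 12   # USD saved over twelve months

print(f"{saving:.1f}% cheaper, ${yearly_saving} saved per year")
```

That works out to 82.5%, which matches the "~83%" quoted, and to $1,980 a year at those prices.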

Over the years, I've swayed between VPS and bare-metal dedicated servers. I'm in the bare-metal phase again right now. I think the cost:performance ratio is hard to beat if you don't mind getting your hands dirty with server admin.

Oh boy!

We are running three autoscaling GKE clusters, one of them for development purposes.

That leaves two for production, one in Europe and one in the US. We are using an Anthos Ingress to direct traffic to the cluster closest to the client.

The databases are replicated across the two clusters, and we have a Redis cache in each cluster to minimize repeated read latency, with a queue system across the clusters so that one cluster can invalidate the other cluster's cache when an element is updated on its side.
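The cross-cluster invalidation idea can be sketched roughly like this in Python. Everything here is a stand-in and an assumption: plain dicts play the role of the Redis caches, a single shared dict plays the replicated database (so replication lag is ignored), and `queue.Queue` plays the queue system. The `Cluster` class and its method names are hypothetical, not the setup described above.

```python
import queue


class Cluster:
    """Toy model of one region: a local cache plus an invalidation inbox."""

    def __init__(self, name, database):
        self.name = name
        self.database = database    # stand-in for the replicated database
        self.cache = {}             # stand-in for the local Redis cache
        self.inbox = queue.Queue()  # invalidation messages from peers
        self.peers = []

    def read(self, key):
        # Serve from the cache, falling back to the database on a miss.
        if key not in self.cache:
            self.cache[key] = self.database[key]
        return self.cache[key]

    def write(self, key, value):
        # Update locally, then tell every peer to drop its cached copy.
        self.database[key] = value
        self.cache[key] = value
        for peer in self.peers:
            peer.inbox.put(key)

    def drain_invalidations(self):
        # Apply all pending invalidation messages to the local cache.
        while True:
            try:
                key = self.inbox.get_nowait()
            except queue.Empty:
                break
            self.cache.pop(key, None)


db = {"price": 10}
eu, us = Cluster("eu", db), Cluster("us", db)
eu.peers.append(us)
us.peers.append(eu)

before = us.read("price")   # caches the value in the US cluster
eu.write("price", 12)       # EU updates and notifies the US cluster
stale = us.read("price")    # still the cached value until the message is handled
us.drain_invalidations()
fresh = us.read("price")    # cache miss, so it is re-read from the database
print(before, stale, fresh)
```

The window between the write and the drain is where a stale read can happen, which is the trade-off of invalidating through a queue instead of synchronously.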

Otherwise the cluster consists of .NET Core-based microservices. Deployment is all automated.

The client itself is distributed from the same load balancer, but with path-based rules that route to a cloud storage container instead of to the cluster, and it is replicated using a CDN.

You don't have Serverless as an option. That's usually my go-to these days. I'd rather not have to worry about managing servers. AWS Serverless-related products such as Lambda, SQS, SNS, and CloudWatch have been working like magic for our startup so far. Though we've only been in beta for about 2 months now. It has worked flawlessly so far and I don't have to worry about any servers going down :)

I'm using Google App Engine Standard, and it autoscales depending on the volume. Also it has a free tier. So far, so good.

You missed a poll option:

O I don't know yet

haha, fair enough.

Heroku :)

nice, doesn't it get costly at high scale?

Yes, but I was using Elastic Beanstalk before and was just fighting to keep the site running. It is a reasonable trade-off for me.

I use Kubernetes.

Strictly speaking, "A single server with powerful specs" is not scaling. It is more like 'anticipating', unless you are somehow responding to increases in traffic by reconfiguring it as you go.

"A single server with powerful specs" is not really scaling, as there's a limit to how far you can go. The only real way to scale is to scale horizontally by adding more servers.

That being said, in most cases one server is enough, unless your task requires high computing power or you have millions of users/requests.

Horizontal scaling all the way. You can only upgrade your servers so many times; at a certain scale you will hit a wall, and it will be way harder to scale horizontally if your app was not built with that in mind (e.g. it stores media and static assets locally instead of in a centralized CDN location, or it manages some resources locally, such as long-running scripts whose output the web server picks up at any moment, instead of separating the asynchronous processing from the main web server).
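As a rough illustration of separating asynchronous processing from the web server, here is a minimal Python sketch. It is only a single-process toy under stated assumptions: `queue.Queue` stands in for a shared message broker, the `results` dict stands in for a shared result store, and `submit`, `worker`, and `status` are hypothetical names. In a real multi-server setup both stores would live outside the web process.

```python
import queue
import threading
import uuid

jobs = queue.Queue()            # stand-in for a shared queue / message broker
results = {}                    # stand-in for a shared result store
results_lock = threading.Lock()


def submit(payload):
    """What a web handler would do: enqueue the job and return at once."""
    job_id = str(uuid.uuid4())
    with results_lock:
        results[job_id] = {"status": "pending", "output": None}
    jobs.put((job_id, payload))
    return job_id


def worker():
    """Runs apart from request handling and processes jobs one by one."""
    while True:
        item = jobs.get()
        if item is None:            # sentinel value: shut the worker down
            jobs.task_done()
            break
        job_id, payload = item
        output = payload.upper()    # placeholder for the long-running work
        with results_lock:
            results[job_id] = {"status": "done", "output": output}
        jobs.task_done()


def status(job_id):
    """What a later request would do: look the result up by job id."""
    with results_lock:
        return dict(results[job_id])


thread = threading.Thread(target=worker, daemon=True)
thread.start()

job_id = submit("resize image 42")
jobs.join()                     # a real client would poll status() instead
final = status(job_id)
print(final)

jobs.put(None)                  # stop the worker
thread.join()
```

Because the handler only touches the queue and the result store, any number of web servers and workers could share them, which is what makes adding servers later much less painful.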

Nowadays, I containerize everything and it's just so much easier to deploy things. I sometimes use Kubernetes, which makes everything way easier, or I just have an Ubuntu instance with Docker installed so I don't have to care about installing and managing other dependencies.

As I've painfully learned...