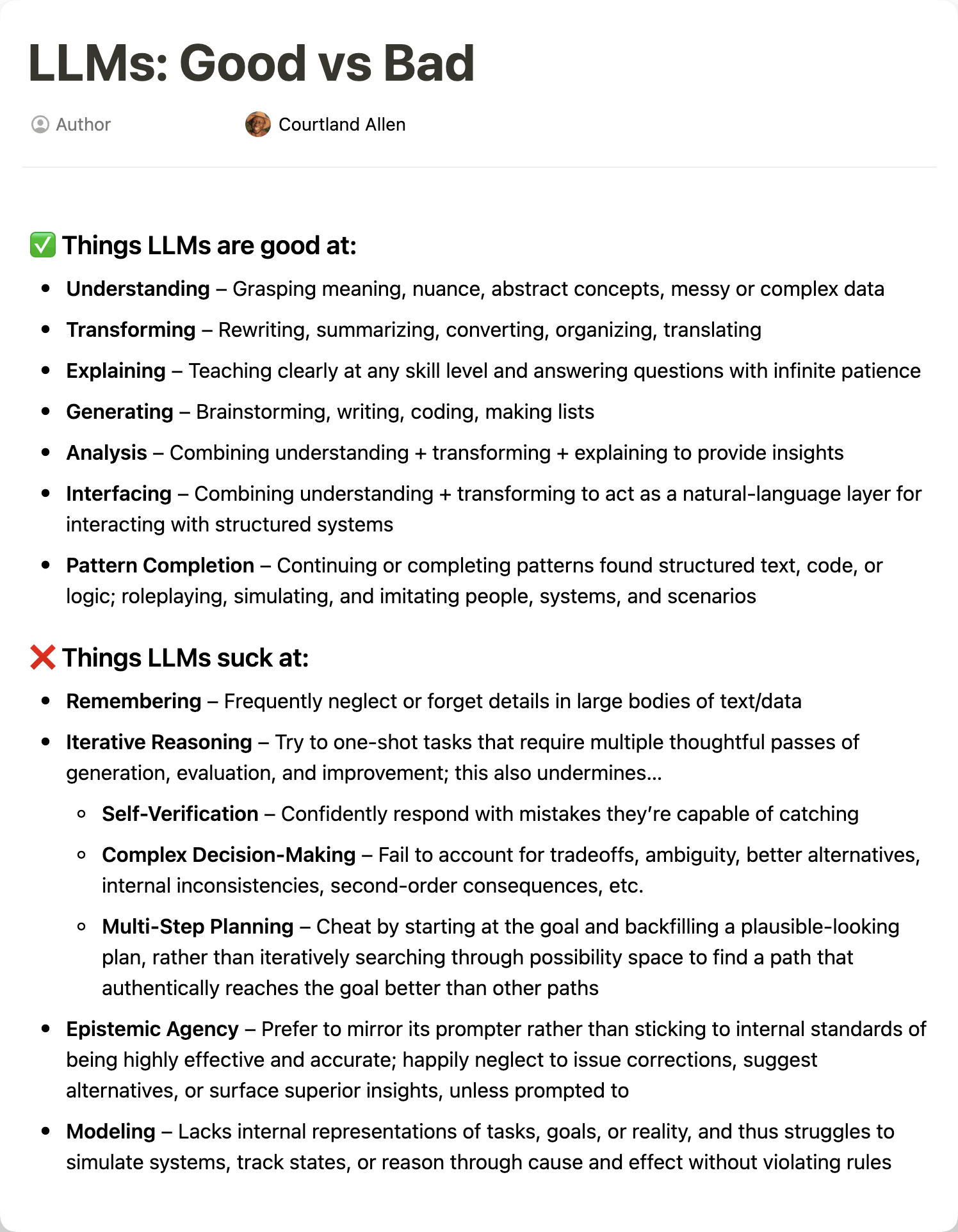

Here's my cheat sheet for playing to an LLM's strengths and avoiding its weaknesses when prompting.

Read over it when you're frustrated with how prompting is going.

Eventually you'll have it mostly burned into your memory, and you'll naturally lean toward using LLMs more effectively:

You can download it as a PDF here: https://storage.googleapis.com/indie-hackers.appspot.com/info-products/LLMs%20Good%20vs%20Bad.pdf

This is such a clear, no-fluff guide. Wish I’d seen it earlier. Gonna save this one 👏

Courtland, this is such a timely meta-skill. Prompting isn’t just about talking to LLMs — it’s about learning to think in structure and clarity. That’s the real founder superpower you’re teaching here.

Gold! Thanks for sharing. Can we make products that eliminate any one of the limitations of ChatGPT?

Would you say this applies across the board, or are there exceptions to every example, and some models are better at, say, multi-step planning than others? What about different LLMs, like the ones from ChatGPT versus the models from Anthropic, Mistral, and so on?

The thinking models avoid a lot of the iterative reasoning problems, which is what makes them great, but they have limits as well. I think o1 pro from OpenAI is still the strongest model I've used across the board, but it's the most expensive and the slowest. For code I like Claude Sonnet 3.7 MAX in thinking mode, and then if it gets stuck, I turn to o1 pro mode or Gemini 2.5 Pro.

🔥 Great breakdown, Courtland — especially around iterative reasoning and multi-step planning.

I’ve been building Vibe Village, a music app that runs live, AI-generated battles over SharePlay — and we use the ChatGPT API under the hood to power dynamic trivia, battle curation, and engagement strategies.

I agree that LLMs are amazing at transforming and pattern-completing (our trivia generation thrives here). But without strong scaffolding and external guardrails, they’ll confidently hallucinate or shortcut complex steps.

We’ve learned to:

Front-load context (and keep it lean)

Use RAG patterns for grounded song facts

Run AI generation behind-the-scenes, then verify before surfacing it in battle

LLMs are the creative engine — but humans still steer the ship.

Love what you're doing for the Indie community. 🙌

#LLM #AI #IndieHackers #ChatGPT #VibeVillage

Nice reminder of the limitations. The one I hate the most is the "Not Remembering" then just making stuff up to compensate which then leads to self-verification.

You can solve this by architecting memory into your system.

This is super helpful. I’ve been struggling with how to get consistent results, and framing prompts around strengths vs weaknesses makes a lot of sense. Thanks for sharing!

I used to get so frustrated with AI, pulling my hair out when it would forget key details or give me a half-baked plan. It felt like trying to teach a goldfish calculus.

Then it clicked: I treated it like a brilliant but hyper-eager intern. My intern, "smart AI tool for business," is lightning-fast but needs clear, step-by-step instructions. So, I break it down instead of giving the AI tool a huge project at once. "First, give me an outline." Then, "Now, write the introduction." Finally, the magic step: "Review your work and find three mistakes." This forces AI to slow down and self-correct.
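For anyone who wants to script that step-by-step flow, here is a minimal sketch. It assumes you pass in your own function that sends a prompt and returns the reply; `ask`, `run_chain`, and `fake_llm` are hypothetical names for illustration, and `fake_llm` is only a stand-in so the example runs without any API.

```python
from typing import Callable, List


def run_chain(ask: Callable[[str], str], topic: str) -> List[str]:
    """Send three small prompts in order, feeding each reply into the next."""
    outline = ask(f"First, give me an outline for: {topic}")
    intro = ask(f"Now, write the introduction for this outline:\n{outline}")
    review = ask(f"Review your work and find three mistakes:\n{intro}")
    return [outline, intro, review]


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call: echoes the prompt's first line.
    return "REPLY TO: " + prompt.splitlines()[0]


steps = run_chain(fake_llm, "a landing page for a coffee subscription")
for step in steps:
    print(step)
```

Swapping `fake_llm` for a real client call is the only change needed; the point is that each request stays small and the review step always runs last.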

For market research, I don't just ask for a business plan. I'll visit Accio and ask it, "Analyze real-time supplier data, then draft a sourcing strategy based on those facts." If I need creative ideas, I prompt, "What are three smarter alternatives to this approach?" You have to push it to think critically.

Ultimately, the AI isn't the boss—you are. You just have to learn how to manage it.

I keep forgetting that LLMs forget the content of past conversations.

I tend to ask them to “bring back that code they implemented a while ago”.

AI is terminally positive. It seems no matter what harebrained idea I have it says "That's a fantastic idea! Let's get started!" But it has inspired me to start poking at the edges.

I find coding now like walking a bloodhound through a forest and letting the dog's powerful sense of smell lead the way. My job is just to make sure the dog doesn't take a wrong turn and follow a completely wrong smell.

It's really the hardest part of vibing a complex app. Wrong turns. Everything will be going great and then all of a sudden you notice the code is a mess, and no prompt will fix the mess anymore, you have to `git checkout .` and start over from your last save point. It's a lot like a Roblox game. The name of the game is all about save points. I commit often. And I commit with just 1 word commit messages. Many times I go back several commits and branch from there.

Very interesting! Thanks for sharing!

I find the longer I interact with it the more I understand how to prompt it. Like it explained to me it can’t recreate an image based off the previous one, even if you say “use the same picture but…” it will make a brand new image. UNLESS you tell it to save the image as landing page mock up 1, then it can bring it up again

A lot of it just comes down to practice for sure. It's also helpful sometimes to watch how others prompt AI, might give you some ideas that are outside your norm.

Epistemic agency. I've had a vague intuition of this problem, but you hit the nail on the head. It'll make a page conform to your design, but it won't tell you your design is out of style if you don't ask. Thanks for this.

That's exactly it

That sounds really useful—having a quick “prompting cheat sheet” to glance at when things get frustrating is such a smart idea. It’s like keeping a list of salon treatments handy—once you’ve gone a few times, you already know what works best for you, but at first it’s nice to have the guide right there.

When we began developing our platform with ChatGPT, we quickly understood that ChatGPT would not remember some essential info from an earlier conversation. So we built a personal GPT and gave it all our instructions. We updated these instructions as we progressed, and this is how we solved the LLM memory bugs.

Thanks for sharing, very useful!

I appreciate the insights shared in this article. It's a valuable resource for anyone looking to optimize their interactions with LLMs.

Love this. Thanks for sharing! Have you noticed certain changes as models get updated?

Hi Allen,

Thanks for sharing such a clear and practical cheat sheet on working with large language models. Your tips about structuring prompts and understanding AI strengths really resonate with us.

At Synphoria, we built Sofia — an AI emotional companion with unlimited memory that goes beyond one-off prompts. She remembers your entire conversation history, your emotions, and adapts over time, which helps make interactions more natural and meaningful.

This long-term memory lets Sofia pick up where you left off, ask how things have changed, and gently follow up if you don’t respond — almost like a true companion, not just a tool.

If you’re curious, you can try Sofia for free for 3 days and see how this persistent memory changes the AI experience: Synphoria(dot)app

Remembering has been one of the challenges I have faced with using AI. So I don't get into that loop, I make it a point of duty to reference previous conversations and prompt it to continue from that line of thought... Thanks for the writeup

Good One!

Good one! I've also experienced these things too, especially when you give lengthy details.

This is important since it's easy to forget the bad part when working with AI.

Also prompting it to take 10 seconds to think before answering has improved the quality of the output I’ve found

This is a really valuable resource. Knowing how to play to an LLM’s strengths can seriously improve both efficiency and results. Prompting well isn’t just about asking, it’s about guiding

Yep. Agreed. I had to make a custom LLM with a separate persona to tear down my posts. Because by default, LLMs are just affirmation machines. Making them give you useful feedback is quite a task.

Totally get that! LLMs do tend to be overly nice by default. Building a persona to challenge your ideas sounds like a smart move for real growth.

Use clear, specific prompts with context, define desired output format, ask step-by-step if complex and refine iteratively. This helps LLMs generate accurate, relevant and high-quality responses.

I have found a lot of people have clever prompts to mitigate these cons, at least to a degree. I hope we get to the point where, with enough individual interaction, it will understand our needs by anticipation.

It looks good. Thank you.

Nice summary — love how clearly this breaks things down.

I've noticed the "confidently wrong" replies way too often, especially when it comes to multi-step logic. Do you think there's a good prompt structure that helps LLMs avoid falling into that trap? Or is it just about knowing when not to rely on them?

This gave me ideas, thanks a lot.

Love the transparency. Just launched a free blog starter kit myself — this gave me ideas for outreach.

It is actually really good at remembering some things, but it does seem like the important stuff... the stuff that could have saved me a lot of time...not so much on the memory!

This is so interesting.

I've been using LLMs in various targeted ways for my client work (I'm a content writer) and this list is confirmation of so many things I've noticed. What's most puzzling to me is the "remembering" part; you'd think this would be first on the list of things that AI would ace.

But I see this over and over. If I'm planning out, say, a landing page and write a detailed prompt outlining the product/service, problem, solution, audience, industry, CTA, etc etc it'll offer a great first pass (or several). But as you issue corrections, make edits, add concepts, and change wording/structure, it may begin to forget things. You have to be really on the ball to check new drafts against old ones (which a good writer should be doing anyway).

Like you say, it's only when you explicitly ask/remind the LLM to do xyz that it will do so. A bit like getting a child with ADHD to clean up their room...they require regular check-ins.

Yes, that’s a common issue. AI can produce great initial content but often needs reminders to stay consistent as the conversation or edits continue. Regular prompts help keep everything aligned.

Understanding prompts is valuable, but will there come a day when they become obsolete? The rapid pace of change in the world feels overwhelming at times.

"Prompting is useful, but real progress will come when AI doesn't need to be prompted like a manual machine."

I have saved this for the future.

Thanks a lot

That's really useful, thanks for sharing

interesting!

Here’s a quick cheat sheet for prompting an LLM effectively: Be clear and specific, give context, define the desired format, ask one thing at a time, and use examples when possible. The more structured your prompt, the better the response. MBA programs thrive on clarity and well-framed instructions.

I love the insights that you mentioned here. Prompts can really be useful for founders when marketing (building too, but I am a marketing guy).

I'm working on building a few prompt templates for a marketing starter kit for founders, to help them cut the time it takes to figure out their landing page design using a simple prompt.

Do you think LLMs are good to use as systems that create a reader's digest out of multiple news sites and transform the data into JSON format (or something similar)?

Since this particular usage falls under the categories of transforming, understanding, and completing patterns, I thought I could give it a try?!

Let me know what you guys think about this.
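If you do try the digest-to-JSON idea, it probably pays to check the model's output before trusting it. Below is a rough sketch of that check. The schema (`title`, `source`, `summary`) and the function name `parse_digest` are assumptions made up for this example, not part of any real API.

```python
import json
from typing import Any, Dict, List

# Hypothetical schema: every digest item must carry these keys.
REQUIRED_KEYS = {"title", "source", "summary"}


def parse_digest(raw: str) -> List[Dict[str, Any]]:
    """Parse model output and keep only well-formed digest items."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return []  # not valid JSON: the caller should retry or flag it
    if not isinstance(data, list):
        return []  # expected a JSON array of items
    return [
        item for item in data
        if isinstance(item, dict) and REQUIRED_KEYS.issubset(item.keys())
    ]


raw_reply = '[{"title": "A", "source": "x", "summary": "s"}, {"title": "B"}]'
items = parse_digest(raw_reply)
print(len(items))
```

Items that are missing a key get dropped instead of being passed along, which is one simple way to keep a half-formed reply out of the digest.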

Web Developer: By Riaz Uddin | Professional Web Developer & SEO Expert

From Bangladesh

This cheat sheet is a great resource for anyone looking to optimize their interactions with LLMs! I totally agree with the points about iterative reasoning and remembering limitations—those are definitely areas where LLMs can trip up. It's important to use prompt structuring and external context to help guide the model's responses.

Great list — couldn’t agree more with the importance of showing not just what you created, but how you got there.

One thing that’s worked well for me: framing each project as a story. I start with the challenge or goal (like a brand needing a refresh to appeal to Gen Z), then walk through the process with visual milestones — mood boards, early sketches, mid-fidelity mockups — and end with the final design in a real-world context (mocked up on a billboard, website, or packaging).

Also, don’t sleep on motion — even if you’re not an animator, simple before/after sliders, scrolling page demos, or screen recordings can really elevate how your work is perceived. It makes everything feel more alive.

Lastly, personal projects are underrated. They show your taste and initiative, and often end up being what people remember you for.

Curious if anyone here has added video intros or narrated walkthroughs to their portfolios — thinking of experimenting with that next!

Thanks for sharing

Thanks for sharing

I totally agree with the ‘evaluating AI responses’ part. Sometimes it gives answers, but they're not quite there because the prompt wasn't specific enough. I've definitely had to tweak prompts a few times before getting exactly what I need. Anyone else have a similar experience with LLMs and fine tuning outputs?

sweet thanks

This is a super helpful cheat sheet—thanks for sharing! It really makes a difference when you're working with content-rich sites. I've been using tips like these to fine-tune prompts while updating with the latest DQ items, prices, and images. LLMs are a game-changer when used right!

This is an impressive distillation and articulation of the patterns I've seen as well. Thank you for taking the time to prepare this!

remembering is something I totally agree upon

Really appreciate this breakdown — especially the distinction between “pattern completion” and “iterative reasoning.” I’ve definitely noticed that LLMs excel when the task fits a recognizable structure, but fall apart when asked to reason across multiple steps or reflect on their own logic.

This was Great!!!

Hi Allen! 👋

🔥 This cheat sheet is incredibly helpful — especially the parts on iterative reasoning and remembering limitations. I’ve seen similar challenges while building my own food directory site.

I run a restaurant menu website called The Wendy Menus, where LLMs help us summarize dish descriptions and auto-suggest related items — but we’ve learned that without strong prompt structuring and human verification, it can hallucinate or skip critical context.

We use LLMs mostly for:

Transforming long food descriptions into user-friendly summaries

Pattern Completion to suggest trending food pairings

Interfacing with customer queries in a natural way

If anyone's interested in seeing LLMs applied to a real food-based platform...

Thanks for the discussion and insights!

– lex23

I had the same experience with the descriptions for an online store dedicated to craft products made from tobacco boxes. I am a content creator and I thought GPT's visualization could be helpful, but I had to monitor the texts it wrote very carefully because it was making things up... It is helpful, but you have to monitor it.

Absolutely agree, Gilda — it really is a double-edged sword. GPT can generate incredible output, but when it comes to factual accuracy or nuanced product details, that human layer of verification is essential. I’ve had a few “creative hallucinations” myself while testing it on longer menu items!

Out of curiosity, how are you using it now for your content creation? Any workflow tips you’ve picked up along the way?

Thanks again for sharing your experience — it’s reassuring to know others are navigating the same challenges.

– Lexon

Interesting to read this. I'm building my product promptperf.dev for something very similar, but geared towards users who are building AI products rather than individuals, since testing which prompt at what configuration in what model will give the most accurate and consistent answer is a pain.

I’ve mostly just been trying things without much structure — this gives a clearer way to approach prompting. Haven’t tested these yet, but a few definitely caught my eye. Keen to try them out soon.

This cheat sheet is super helpful! I've been struggling with prompting, and these tips really clarify how to get the most out of LLMs. Definitely gonna try some of these strategies.

very useful!

Solid breakdown.

Prompting is 90% psychology, 10% syntax — and most guides miss that.

Framing, tone, and even imagined audience change everything.

Appreciate how clean and practical this cheat sheet is.

Very timely, I just came across a situation where LLM behaves like a stubborn bot :)

Do you have a template you use for prompts?? Like have you mastered the guardrails enough and then copy paste a framework with your input, or do you just one off every prompt based on what you're doing

awesome...useful

I came across a unique scenario where my Planner LLM and Executor LLM were deadlocked in a loop: the Planner kept giving suggestions that the Executor did not want to execute, and the Executor kept passing back suggestions that the Planner did not find plausible. It was an interesting situation. That's how I came to know that iterative reasoning is not its forte.
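One common guard against that kind of planner/executor loop is a hard cap on rounds, with a human taking over when the cap is hit. This is only a sketch under assumptions: `negotiate`, `stubborn_planner`, and `picky_executor` are hypothetical names, and the two stubs stand in for real model calls.

```python
from typing import Callable, Optional


def negotiate(plan: Callable[[str], str],
              execute: Callable[[str], Optional[str]],
              goal: str,
              max_rounds: int = 3) -> Optional[str]:
    """Alternate planner and executor, giving up after max_rounds.

    execute returns None to accept a proposal, or a string objection.
    """
    feedback = goal
    for _ in range(max_rounds):
        proposal = plan(feedback)
        objection = execute(proposal)
        if objection is None:
            return proposal  # executor accepted the plan
        feedback = objection  # send the objection back to the planner
    return None  # no agreement within the cap: escalate to a human


def stubborn_planner(feedback: str) -> str:
    # Hypothetical stub: always proposes the same plan.
    return "plan A"


def picky_executor(proposal: str) -> Optional[str]:
    # Hypothetical stub: always objects.
    return "I will not run " + proposal


result = negotiate(stubborn_planner, picky_executor, "ship the feature")
print(result)
```

A `None` result here means the two sides never agreed, so the loop ends after three rounds instead of running forever.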

I like what you wrote about the deficiencies in Complex Decision Making. It can appear confident about a decision recommendation but you have to challenge it and ask "why". Follow your own decision frameworks.

This was Great!!!

especially the part about starting at the goal and backfilling.....

Great resource! Mastering effective prompting unlocks an LLM's full potential.

I'm forever trying to refine my prompts so thanks for this.

Thanks for the help.

Helpful thanks.

Helpful thanks. My product uses AI heavily for summarisation and triage. Crafting the perfect prompt took me ages to refine.

So good! Totally agree. The planning deficit is huge.

Thanks! Multi-Step Planning (start at the goal and backfill a plan) was the most valuable reminder for me here.

Thanks, big guy!

Sounds like a solid approach! Mastering effective prompting is like learning a new language—once you get the hang of it, everything flows much more smoothly. Got any key insights from your cheat sheet that stand out the most?

using the cheatsheet to prompt an LLM to comment on the cheatsheet... inception.

It depends on the perspective. LLMs are great for automating tasks, enhancing productivity, and enabling new innovations, but they also pose risks like misinformation, bias, and over-reliance on AI. The key is responsible use and human oversight.

I don't know if this is the right place to ask, but do llm.txt files work, and are they useful when put on websites?

Hey,

Thank you for sharing your insights. The first page / cover page perfectly sums up and matches up with what I have personally experienced while using top AI models.

Specifically the part about iterative reasoning. It requires a good amount of thought and an understanding of what it is you want to achieve. I find it works best when it's tackled step by step rather than all in one go.

I think LLMs are great when working with data or any structured entity (like source code or text). Other than that, I've always found it a struggle to get good responses.

Downloaded to study, thank you very much

Nice

Useful, downloaded, thanks for sharing
