Everything you need to know about DeepSeek and R1

The Chinese company has tanked U.S. stocks and embarrassed investors with its low-price, low-investment reasoning model.

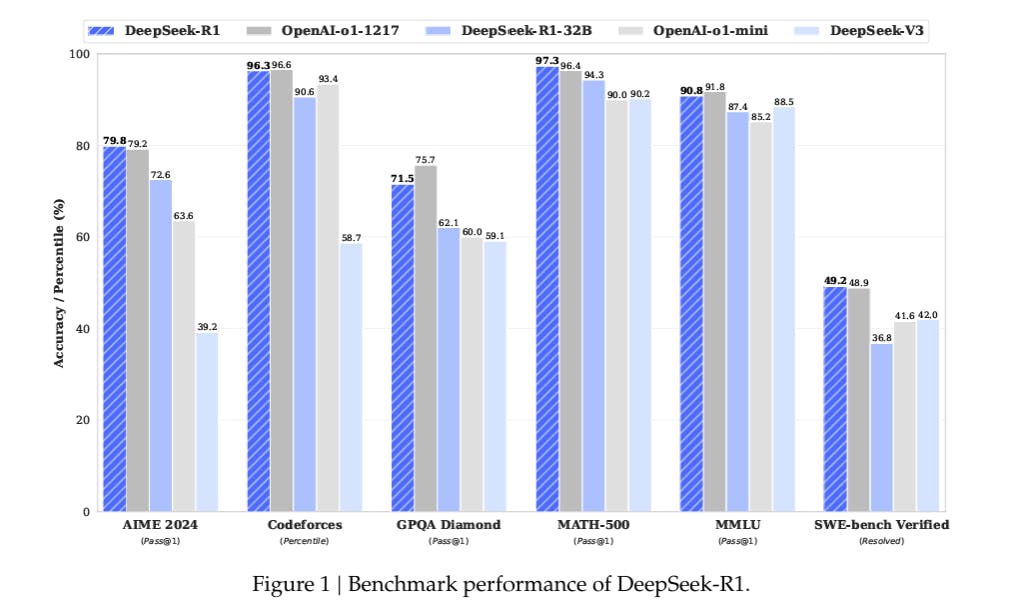

DeepSeek's R1 model has disrupted U.S. AI stocks by offering low-cost, high-performance reasoning.

Founded by Liang Wenfeng, DeepSeek aims to advance AI without focusing on commercial gain.

Indie hackers can access R1 via open-source models or a low-cost API for innovative applications.

Everyone and their mother is talking about DeepSeek: a small Chinese AI company that's managed to build a powerful reasoning model at a fraction of the cost of its better-known rivals.

That model — R1 — has sent the tech market reeling. Investors are questioning why the U.S. is pouring so many billions into AI infrastructure. Nvidia, whose best-in-class chips are fuelling hungry AI tech, has seen its share price tumble since R1's release last week.

As if that weren't enough, DeepSeek has since launched a family of image generation models that appear to outperform DALL-E 3.

So who's behind this company and how have they made such a splash, so fast? And most importantly — how can indie hackers use DeepSeek's tech to boost their own products?

Who's behind DeepSeek?

DeepSeek was launched in 2023 by Chinese engineer and hedge-fund founder Liang Wenfeng. He grew up in a small city in Guangdong in the 1980s before studying at Zhejiang University in the eastern city of Hangzhou, according to state media.

Liang has been interested in AI for a long time. In 2015, he co-founded AI-focused hedge fund High-Flyer Quant, which went on to build its own powerful supercomputer. The firm is the sole owner of DeepSeek, which Liang launched both to advance AI research and to push Chinese innovation forward.

He told tech website 36Kr last year: “We often say there’s a one or two-year gap between China and the US, but the real gap is between originality and imitation. If this doesn’t change, China will always be a follower.”

DeepSeek is a small Chinese business made up of around 140 engineers and researchers. It doesn't appear to be financially backed by the Chinese government, but it did become operational roughly in line with a change to national regulations.

It's a research-focused endeavor and doesn't operate for commercial gain. Its models are open source and its API is relatively cheap in part because the firm wants outside companies to build on the tech and push its boundaries.

How did DeepSeek make R1 so cheaply?

DeepSeek says it spent just under $6 million training the base model behind R1, a fraction of the $100 million to $1 billion spent on rival U.S. models. It's not clear exactly how the firm was able to achieve so much on what seems like such a shoestring. We don't know what data the firm trained it on, for example.

But we do know the company likely had to work with less processing power than its U.S. rivals because of U.S. export restrictions on high-end Nvidia chips. Although it purchased many chips before the restrictions kicked in, it's likely to be using lots of "H800" chips, which are a less powerful version of Nvidia's mighty H100s.

A technical report on R1 and its companion models spells out how the firm developed the family, giving us insight into how the firm did so much with so little.

Its competitors tend to use "supervised fine-tuning" (SFT), whereby researchers feed the model carefully selected data designed to teach it to process queries step-by-step.

DeepSeek instead used "reinforcement learning" to train the model. Researchers gave it a set rule system to follow, alongside a reward system to recognise accuracy and certain formatting.
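To make the reward idea concrete, here is a minimal sketch of a rule-based reward that scores accuracy and formatting. The `<think>`/`<answer>` template, the function names and the 0.5 weighting are illustrative assumptions, not DeepSeek's actual implementation.

```python
import re

# Assumed output template: reasoning inside <think>, final answer inside <answer>.
TEMPLATE = re.compile(r"^<think>(.+?)</think>\s*<answer>(.+?)</answer>$", re.DOTALL)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the assumed template, else 0.0."""
    return 1.0 if TEMPLATE.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, expected: str) -> float:
    """Return 1.0 if the text inside <answer> equals the reference answer."""
    match = TEMPLATE.match(completion.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == expected.strip() else 0.0


def total_reward(completion: str, expected: str) -> float:
    """Combine both rules; the 0.5 weight on formatting is an arbitrary choice."""
    return accuracy_reward(completion, expected) + 0.5 * format_reward(completion)
```

Because both rules are plain string checks, the reward can be computed automatically for every sampled answer, with no human grader in the loop.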

An early version of the model given no SFT (R1-Zero) spontaneously reflected on its own "reasoning" during this process — an exciting step for its creators. But it also offered pretty unpredictable results.

Researchers combined reinforcement learning with a baseline of supervised data to produce the R1 model DeepSeek released last week.

How can I use R1 as an indie hacker?

Whether you're a developer or not, there are plenty of ways to experiment with R1.

Most exciting for technical founders is a suite of "distilled" models released alongside the full version of R1. These are open-source models from Meta and Alibaba that have been fine-tuned on R1's outputs, which massively improves their reasoning. Best of all, the smallest can be run on a regular laptop.

You can download them all via the HuggingFace platform.
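As a rough sketch of what running one locally can look like, the snippet below loads the smallest distilled checkpoint with the Hugging Face `transformers` library and separates the model's reasoning from its final answer. The helper names `split_reasoning` and `ask_local` are made up for illustration, and the chat-style return format assumes a recent `transformers` release, so check it against the version you install.

```python
MODEL_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"  # smallest distilled checkpoint


def split_reasoning(text: str) -> tuple[str, str]:
    """Split generated text into (reasoning, answer) around the closing </think> tag."""
    reasoning, sep, answer = text.partition("</think>")
    if not sep:  # no reasoning block found: treat everything as the answer
        return "", text.strip()
    return reasoning.replace("<think>", "").strip(), answer.strip()


def ask_local(prompt: str, max_new_tokens: int = 512) -> tuple[str, str]:
    """Generate with the distilled model; the weights are downloaded on first use."""
    # Imported lazily: requires `pip install transformers torch`.
    from transformers import pipeline

    generator = pipeline("text-generation", model=MODEL_ID)
    messages = [{"role": "user", "content": prompt}]
    result = generator(messages, max_new_tokens=max_new_tokens)
    # For chat-style input the pipeline returns the whole conversation;
    # the last message is assumed to be the model's reply.
    return split_reasoning(result[0]["generated_text"][-1]["content"])
```

Calling `ask_local("What is 17 * 23?")` would return the reasoning trace and the answer as two separate strings, which is handy if you only want to show users the final answer.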

If you're not looking to run a model locally, but you do want to try out R1 in your own apps, you can access it via a low-cost API. For now at least, this offers an edge on OpenAI's competitor o1 as it's a fraction of the cost. But bear in mind OpenAI is going to release the next-generation o3 model soon, which may see its other prices fall.

You can read the full pricing and specifications for R1 here.
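For a sense of how little code an API call takes, here is a minimal sketch using only Python's standard library. The endpoint URL and the `deepseek-reasoner` model name reflect DeepSeek's public documentation as best I know it and should be verified before use; the `DEEPSEEK_API_KEY` environment variable and the function names are assumptions of this example.

```python
import json
import os
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # assumed endpoint, verify in the docs
MODEL = "deepseek-reasoner"  # assumed model name for R1


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask_r1(prompt: str) -> str:
    """Send the prompt and return the text of the first choice."""
    request = build_request(prompt, os.environ["DEEPSEEK_API_KEY"])
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Reading the key from the environment rather than hard-coding it keeps it out of your repository and out of any prompt you send.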

Lastly, you can use DeepSeek's R1 chat interface in the same way you would ChatGPT. It's completely free, so you don't need to sign up for another $20-a-month subscription. But it does limit the number of messages you can send and receive.

You can chat with DeepSeek here. Or download the app in the Apple or Google Play store.

Although its performance is impressive, DeepSeek still makes mistakes and hallucinates, and it's subject to censorship laws that make it tricky to get solid answers about many queries related to China's government and history.

As with any AI model, you shouldn't feed it sensitive information like user details or API keys. Remember that DeepSeek's servers are based in China, so everything you send to the model will be stored there too. Consider whether that has any bearing on regulations in your home country (and on your own privacy policy).

Solid overview of DeepSeek and R1. I appreciated how you broke down what actually matters (architecture, performance, and use cases) instead of the hype. This makes it much easier to understand where they fit compared to other models.

Now that more people have dug through the research papers, details are surfacing on the technical breakthroughs the DeepSeek team achieved. Here are a few of them:

8-bit Floating Point (FP8) Training: This uses a low-precision but highly efficient system to save memory and boost performance.

Multi-token Prediction: The model predicts multiple tokens at once instead of just one at a time, which can nearly double inference speed.

Multi-head Latent Attention (MLA): Compresses the key-value cache to save VRAM and enhance efficiency.

Mixture-of-Experts (MoE) Model: DeepSeek-V3 has 671B parameters, but only 37B are active for any given token, which cuts the compute needed per token (the full set of weights still has to be stored, so this alone doesn't make it a consumer-GPU model).

(I got this from a great post from Jeffrey Emanuel. If the items above don't make sense to you, I highly recommend reading Jeff's explanation.)
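For anyone who finds the Mixture-of-Experts idea abstract, here is a toy sketch of top-k routing in plain Python. The four "experts", the gate scores and the function names are invented for illustration and bear no relation to DeepSeek's real architecture; only the 37B/671B figures come from the list above.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x: float, experts, gate_scores: list[float], k: int = 2) -> float:
    """Run only the selected experts and mix their outputs."""
    weights = route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, m=m: m * x for m in (1.0, 2.0, 3.0, 4.0)]

# Share of parameters active per token, using the 37B and 671B figures above.
active_fraction = 37 / 671
```

The point of the sketch is that the unselected experts are never called, which is where the compute saving comes from; with the quoted figures, only about 5.5% of the parameters do work on any one token.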

Fantastic, thanks for sharing this. I didn't know R1 was based on Llama and Qwen, but it makes sense. If that's the case, I don't see why the improvements above couldn't be incorporated into the more 'mainstream' models, improving performance across the board. I welcome this, and celebrate open source once more.

Not only can the improvements be incorporated, I'm pretty certain they will. The investors who've poured billions in capital into the frontier AI labs will storm the gates otherwise. And yeah, one of the really magical things about the DeepSeek breakthroughs is how open they are about what they innovated, how they did it, and why. So the ideas behind most of the breakthroughs can be architected everywhere, even if there are implementation-level differences.

Great Katie!

Really impressed by what DeepSeek has done with R1 - great to see more accessible AI solutions emerging in the open source space! :-D

The biggest breakthrough brought by DeepSeek is breaking the Scaling Law of large models, which has also benefited indie developers by lowering the cost of using LLMs for applications. I hope similar breakthroughs can be made in the field of diffusion models (image and video generation), as the inference costs for image and video generation are currently much higher than for LLMs.

Thanks for the insightful post!

This is insightful. Thank you for sharing.

Was the cover image generated with DeepSeek?

Great, Katie. Interesting numbers on DeepSeek.

Thank you for posting such an insightful post!

Thanks for the insightful post, greatly appreciate it. Would love to hear from others whether they were able to switch easily to DeepSeek for their projects and what gaps they found.

Wild how a 140-person team in China just made Wall Street panic with a $6M model. Meanwhile, I still need a $6 coffee before my brain can reason.

Curious if anyone’s already tried running the distilled R1 models on a regular laptop — do they actually work, or will my poor MacBook start smoking?

Really appreciate this deep dive—DeepSeek and R1 have been popping up everywhere lately, and I’ve been trying to wrap my head around what actually sets them apart. The speed and capabilities are wild, especially considering how quickly this space is moving.

It does make me wonder how indie founders like us can realistically keep up or even leverage these tools to build something meaningful without getting lost in the noise. Anyone experimenting with either of them for actual product builds?

This kind of post is gold—thanks for sharing!

Totally agree—the pace is honestly a bit dizzying. One thing that stood out to me with DeepSeek/R1 is that they feel less about chasing raw model novelty and more about practical leverage (cost, speed, reasoning quality) for real products.

For indie founders, I think the edge isn’t “keeping up” with every new model, but picking one capability (reasoning, long-context analysis, code gen, etc.) and building a tight workflow or vertical solution around it. The model becomes infrastructure, not the product.

Curious though—are you thinking more about using them as a drop-in replacement for existing LLMs, or exploring something R1-style reasoning enables that wasn’t feasible before?

I know it, I am in China

While it's been a while since the release, still very helpful. Thank you!

DeepSeek's R1 model is undoubtedly a game-changer, demonstrating that significant AI advancements can be achieved with relatively modest budgets. It's inspiring to see how innovative approaches can disrupt established markets and expand access to advanced technology.

DeepSeek's R1 model is really amazing. Using reinforcement learning to cut costs significantly is really impressive! But with chip restrictions, how can they boost computing power in the future? And in open-source cooperation, how do they balance sharing with keeping their own technology confidential while still making the model better?

R1’s low-cost, high-performance model is a major disruptor in the AI space, raising big questions about efficiency and investment in AI development. The accessibility of its open-source models and API makes it a powerful tool for indie hackers. Excited to see how this competition pushes innovation forward!

Great breakdown, thanks!

DeepSeek's R1 model is shaking up the AI space by offering a powerful reasoning model at a fraction of the cost compared to U.S. competitors. With a development cost of only $6 million, R1 utilizes reinforcement learning instead of traditional supervised fine-tuning, allowing it to deliver high performance on a smaller budget. Indie hackers can access R1 through open-source models or a low-cost API. While it's a game-changer for affordability, users should be mindful of data privacy and censorship concerns due to DeepSeek's operations in China.

DeepSeek’s R1 is a game-changer: powerful, affordable, and accessible. Its $6M build cost proves smart training beats brute force. With a low-cost API and laptop-friendly models, indie devs have a new edge. Some limits exist, but its innovation is undeniable.

DeepSeek AI is an advanced artificial intelligence platform designed to push the boundaries of deep learning and natural language processing. With cutting-edge models, it enables powerful insights, seamless automation, and intelligent decision-making across various industries. Whether for research, business, or creative applications, DeepSeek AI delivers unparalleled efficiency and innovation. 🚀

The fact that DeepSeek, led by Liang Wenfeng, built R1 for just $6M compared to the "$100 million to $1 billion spent on rival U.S. models" is a stunning disruption of the AI landscape. Their research-focused approach of using reinforcement learning instead of traditional supervised fine-tuning, combined with making models open source and APIs affordable, aligns well with Liang's stated goal to advance AI without focusing on commercial gain.

The $6 million spent is on the last training run only.

DeepSeek's R1 model is indeed making waves in the AI community. Its low-cost, high-performance approach is impressive and could democratize access to advanced AI tools. However, it's important to be aware of potential concerns, such as the model's tendency to reflect certain biases or narratives. As with any AI tool, it's crucial to use it thoughtfully and consider the broader implications.

Its open-source nature and accessible API provide ample opportunities for developers and indie hackers to leverage this technology in innovative applications.

Great read, Katie! DeepSeek's R1 is shaking up AI with its low-cost, high-performance approach. The reinforcement learning strategy is fascinating, and the open-source models are a big win for indie developers. But data privacy and China's regulations are key concerns. Excited to see how this competition unfolds—especially with OpenAI's o3 on the way!