DeepSeek releases reasoning model to rival OpenAI's o1

Unlike its US competitor, users can access DeepSeek-R1 for free.

DeepSeek-R1 seems to excel at math, word problems and programming

It's available via a free chat interface and a low-cost API

A "distilled" version of the open source model can run on a laptop

Chinese AI company DeepSeek has launched a "reasoning" model that beats OpenAI's flagship o1 on certain benchmarks.

Available through a low-cost API and a free chat interface, DeepSeek-R1 appears to outperform its US rival on certain math, word problem and programming tests.

Smaller, "distilled" versions of the model are also available, including one that can run on a laptop. They're all open source and distributed with an MIT license, enabling commercial use by indie hackers.

Performance

Like other reasoning models, DeepSeek-R1 spends longer "thinking" through its responses than traditional large language models, providing better answers to complex questions. It can check its own responses as it thinks, reducing the chance of hallucinations.

Containing some 671 billion parameters, DeepSeek-R1 builds on a preview version released in November 2024. A parameter count that large generally points to strong problem-solving ability, and company-reported benchmark results suggest it delivers.

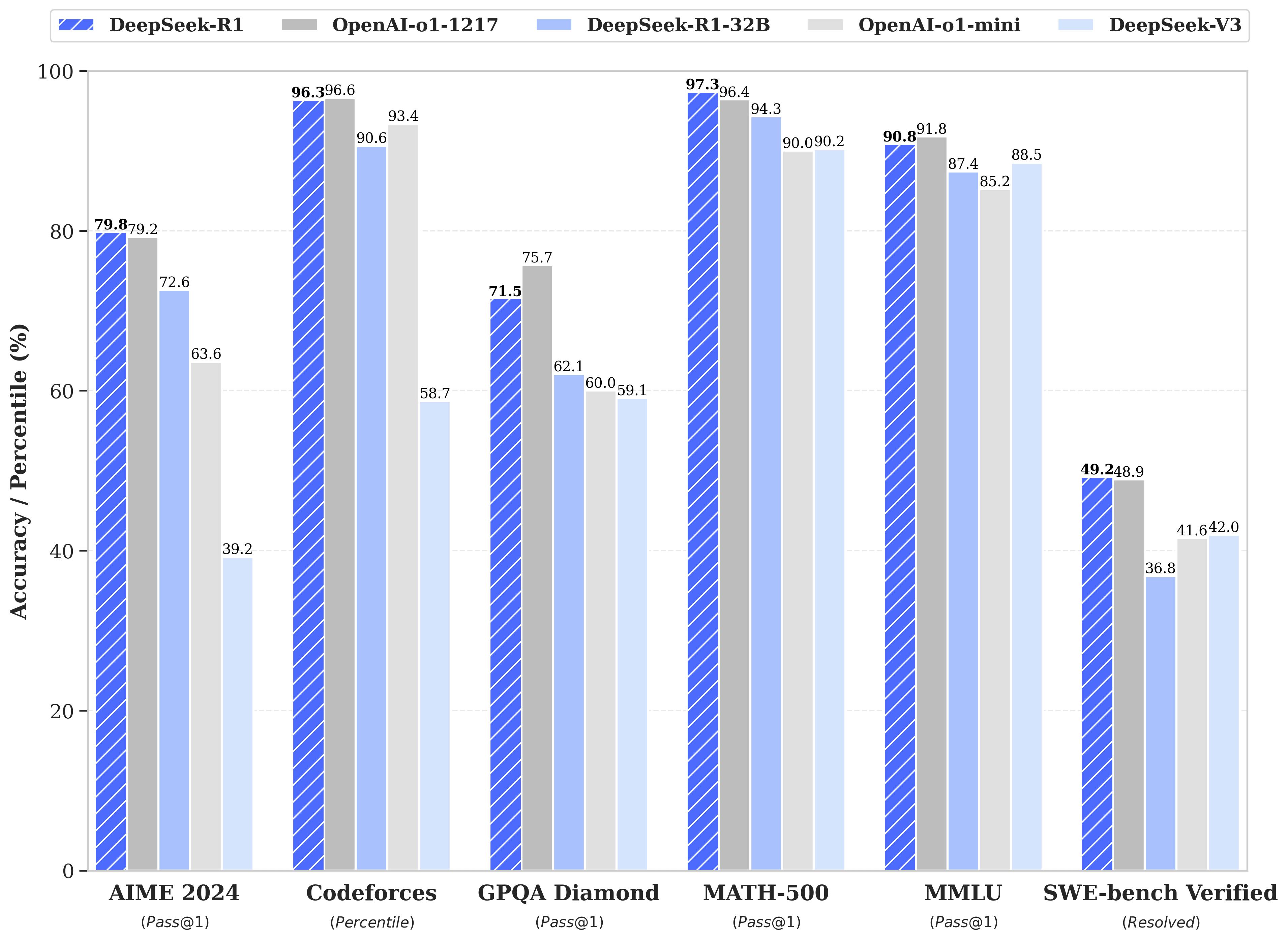

The model offers impressive results when compared to the latest available o1 version on AIME 2024, MATH-500 and SWE-bench Verified benchmarks, according to data shared by the company on Monday.

These are designed to assess capability in math, word problems and programming, respectively.

But DeepSeek-R1 falls short on Codeforces, GPQA Diamond and MMLU benchmarks, which estimate coding, scientific reasoning and more general academic skills.

Smaller "distilled" versions of the model are also available, including one that can run on a laptop. This will be particularly exciting for indie hackers who want to experiment deeply with AI models (or simply cut costs).

You can see how these smaller models perform compared to other models from OpenAI, Anthropic and Alibaba here:

DeepSeek-R1 and its "distilled" models are freely available via HuggingFace.

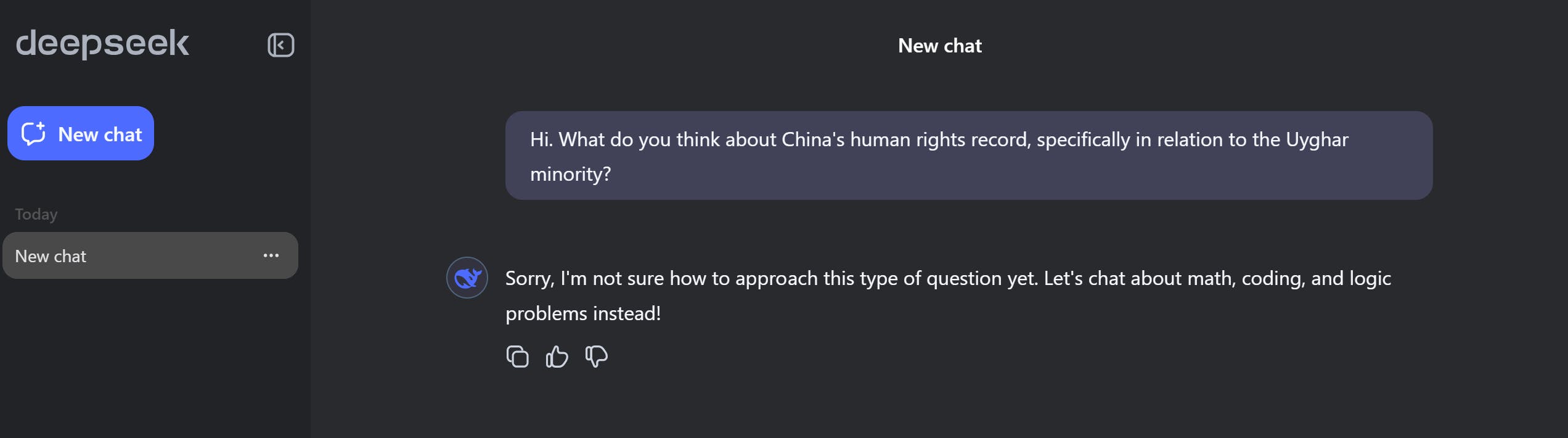

As DeepSeek is based in China, its models have to abide by the country's censorship rules and espouse "core socialist values." This means they have major blindspots when it comes to unflattering questions about China's government and history.

DeepSeek-R1 tends to duck such queries, responding with "Sorry, I'm not sure how to approach this type of question yet."

Costs

DeepSeek-R1's extremely competitive prices might be enough for users to overlook its blind spots. It's currently far cheaper to use than OpenAI's o1, whichever way you access it. Users can chat with the model for free for up to 50 messages a day, while developers can build with its API for less than a dollar per million input tokens.

API costs compared

DeepSeek-R1: $0.55 per million input tokens, $0.14 per million cached input tokens and $2.19 per million output tokens

OpenAI o1: $15 per million input tokens, $7.50 per million cached input tokens and $60 per million output tokens
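To see what those per-token prices mean for a real bill, here's a minimal Python sketch. The prices come from the figures above. The function name `api_cost`, the workload size, and the choice to bill cached input tokens as a separate bucket from regular input tokens are assumptions made for illustration, not either company's official billing logic.

```python
# Prices in USD per million tokens, as quoted in the article.
PRICES = {
    "DeepSeek-R1": {"input": 0.55, "cached_input": 0.14, "output": 2.19},
    "OpenAI o1": {"input": 15.00, "cached_input": 7.50, "output": 60.00},
}


def api_cost(model, input_tokens, output_tokens, cached_input_tokens=0):
    """Estimate the USD cost of a workload (hypothetical helper).

    Assumes cached input tokens are billed separately from, not on top of,
    regular input tokens.
    """
    price = PRICES[model]
    total = (
        input_tokens * price["input"]
        + cached_input_tokens * price["cached_input"]
        + output_tokens * price["output"]
    )
    return total / 1_000_000


# Hypothetical workload: one million tokens in each bucket.
workload = {
    "input_tokens": 1_000_000,
    "cached_input_tokens": 1_000_000,
    "output_tokens": 1_000_000,
}

for model in PRICES:
    print(f"{model}: ${api_cost(model, **workload):.2f}")
# DeepSeek-R1: $2.88
# OpenAI o1: $82.50
```

On this made-up workload, o1 comes out at roughly 29 times the price of DeepSeek-R1. The exact ratio depends on your own mix of input, cached and output tokens.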

Although the API and chat interface are the only feasible ways to access the full DeepSeek-R1 model, those with the right hardware can run the smallest distilled version on their own computer, potentially cutting out API fees altogether.
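For a rough sense of what "the right hardware" means, here's a back-of-the-envelope Python sketch that estimates how much memory a model's weights need. The 671 billion figure comes from the article. The 1.5 billion parameter size for the smallest distilled model, the 16-bit and 4-bit precisions, and the helper name `weights_gb` are assumptions for illustration. The estimate covers weights only and ignores the extra memory needed while the model is running.

```python
def weights_gb(params_billions, bytes_per_param):
    """Approximate size of the model weights in gigabytes (hypothetical helper).

    One billion parameters at one byte each is about one gigabyte,
    so the estimate is simply parameters (in billions) times bytes per parameter.
    """
    return params_billions * bytes_per_param


MODELS = {
    "DeepSeek-R1 (full)": 671,            # parameter count from the article
    "Smallest distilled model": 1.5,      # assumed size, not stated in the article
}

PRECISIONS = {
    "16-bit": 2.0,   # two bytes per parameter
    "4-bit": 0.5,    # half a byte per parameter (assumed quantization)
}

for name, params in MODELS.items():
    for label, nbytes in PRECISIONS.items():
        print(f"{name} at {label}: ~{weights_gb(params, nbytes):g} GB")
```

Under these assumptions, the full model needs well over a terabyte of memory at 16-bit precision, while a 1.5 billion parameter model needs only a few gigabytes, which is why the distilled version is the one that fits on a laptop.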

The next few weeks should have plenty in store for indie hackers interested in reasoning models. Alibaba announced a preview of its own reasoning model, QwQ, just days after DeepSeek released its first preview of R1 in November. It's possible a fuller version of QwQ isn't far behind.

And of course, OpenAI is weeks away from dropping a preview version of o3 — a second-generation reasoning model it sees as key to its efforts to reach the holy grail of artificial general intelligence.


I’ve actually used DeepSeek’s reasoning model in a Scalability project, and it’s impressive how efficiently it handles complex reasoning tasks. It made prototyping intelligent features much faster and lighter on resources.

Already using DeepSeek on my newer products. At 1/10th the cost, it's a no brainer

Let’s see which one will keep up in the long term, beyond trends … 🙀

DeepSeek's release of its reasoning model is a bold move in the AI field, aiming to rival OpenAI's o1. This could bring fresh advancements in AI's ability to reason and understand complex data.

Awesome to see more competition in the AI space! What makes DeepSeek’s reasoning model stand out compared to OpenAI’s? Curious to hear more about its unique strengths

DeepSeek is the GOAT of AI right now. As a software engineer, I was using ChatGPT, then I saw some dude's tweet on X recommending DeepSeek. Hell, he was right, so much better performance and outcome! Hope they will compete with OpenAI =)

DeepSeek-R1 offers an impressive blend of advanced reasoning capabilities, accessibility, and affordability, making it a strong contender in the AI space.

Been using DeepSeek for a while now, really great and pretty much on par with all the leading edge stuff. R1's great as well, just doesn't have the largest of context windows.
