Google I/O 2025: All of Google's AI-related announcements

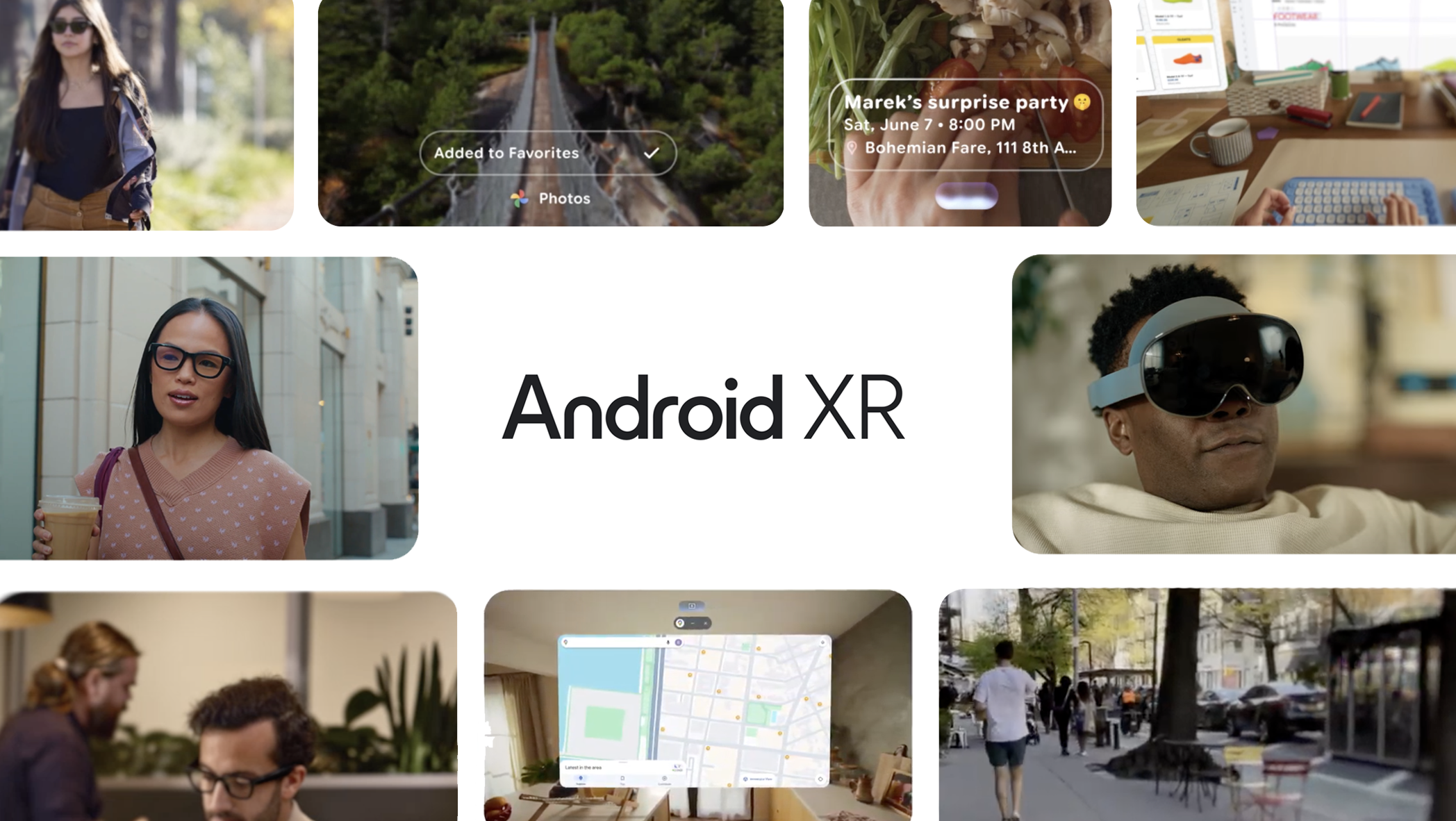

Android XR smart glasses. AI video that includes audio. New subscription models. And more.

Google held its I/O 2025 developer conference on May 20-21 at the Shoreline Amphitheatre in Mountain View. This year's event focused heavily on AI, with updates to Gemini models and new generative AI tools.

Gemini 2.5 Updates

Google announced two additions to Gemini 2.5 during the keynote: Deep Think and thought summaries. Here's what caught our attention:

Deep Think

Google's Deep Think for Gemini 2.5 Pro is its answer to the extended-reasoning features already offered by OpenAI and Anthropic. Like its competitors' versions, Deep Think gives the model extra time to work through multiple candidate solutions before landing on the best answer.

The benchmark results show strong performance:

Topped the LiveCodeBench competition for coding ability

Achieved exceptional scores on the challenging 2025 USAMO math exam

Outperformed OpenAI's o3 model on MMMU tests with an 84% score on multimodal reasoning

Google plans to offer this feature to AI Ultra subscribers once it completes its safety checks.

Thought Summaries

Google's making it easier to see what's going on inside Gemini's "brain" with a new feature called thought summaries in the Gemini API and Vertex AI. Similar to chain-of-thought implementations already available from OpenAI, Anthropic, and DeepSeek, this feature organizes the model's reasoning into a structured format to help developers.

Organizes model's raw thoughts into structured format

Includes headers and key details

Makes debugging AI interactions easier
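To make the debugging use case concrete, here is a minimal sketch of how a developer might separate thought summaries from the final answer once a response comes back. It does not call the Gemini API: the `Part` class, its `thought` flag, and the sample text are hypothetical stand-ins chosen for illustration, so check the official API reference for the real response shape.

```python
from dataclasses import dataclass


@dataclass
class Part:
    """Hypothetical stand-in for one piece of a model response."""
    text: str
    thought: bool = False  # assumed flag: True when the part is a thought summary


def split_response(parts):
    """Separate thought summaries from the final answer text."""
    thoughts = [p.text for p in parts if p.thought]
    answer = "".join(p.text for p in parts if not p.thought)
    return thoughts, answer


# Made-up example data, not real model output.
parts = [
    Part("Parsing the question\nThe user wants a sum.", thought=True),
    Part("Checking arithmetic\n2 + 2 = 4.", thought=True),
    Part("The answer is 4."),
]

thoughts, answer = split_response(parts)
for summary in thoughts:
    # Print only the header line of each summary for a compact debug log.
    print("[thought]", summary.splitlines()[0])
print("[answer]", answer)
```

Logging the summary headers next to the answer is one simple way to see which reasoning steps preceded a surprising output.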

Generative AI media models

Google introduced new AI media creation tools at I/O this year. The updated models can now generate everything from images to videos with sound.

Veo 3

Veo 3 adds sound capabilities to Google's video generation system, moving beyond silent clips for the first time. This update allows for more complete video content creation.

Generates videos with audio

Creates sound effects, background noises, and dialogue

Available with Google AI Ultra subscription

Imagen 4

Imagen 4 updates Google's image generation with improved detail rendering. The focus appears to be on more accurate fine details and typography.

Latest image generation model from Google

Improvements in rendering fine details like fabrics and animal fur

Better typography rendering compared to previous versions

Creates images in various aspect ratios up to 2K resolution

The model supports both photorealistic and abstract styles and is now available in the Gemini app, Vertex AI and Workspace apps.

Flow

Flow combines several AI models to simplify video production. The tool aims to reduce the technical barriers to creating video content.

Built on Veo, Imagen, and Gemini models

Creates cinematic clips from text descriptions

Handles scene creation, transitions, and sound effects

Google is partnering with director Darren Aronofsky on AI storytelling venture "Primordial Soup" to explore creative applications of these tools.

Android XR

Google presented its spatial computing platform for smart glasses and headsets. The system integrates Gemini to power augmented reality experiences.

Smart glasses can overlay UI onto real world

Show notifications and provide turn-by-turn directions

Support live streaming capabilities

Google announced partnerships with eyewear brands like Gentle Monster and Warby Parker. Xreal's Project Aura is expected to be the first Android XR smart glasses to market.

Google Workspace and Chrome

Gemini is expanding into Google's core products with integrations across Workspace apps and Chrome.

Gemini in Chrome: AI browsing assistant that helps understand page context

Gmail: Getting personalized smart replies and inbox cleaning features

Google Docs: Adding "grounding" for Gemini responses to improve context

Google also announced Imagen 4 integration coming to Docs and Slides. The Chrome browsing assistant will be available to Gemini subscribers in the US this week.

AI Subscription Models

Google introduced a two-tier pricing model for its advanced AI features.

Google AI Pro: $19.99 per month with basic access to Gemini features

Google AI Ultra: $249.99 per month for highest level of access

The Ultra tier includes Veo 3, Flow, Gemini 2.5 Pro Deep Think, and higher usage limits in NotebookLM and Whisk.
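For a sense of scale, the short sketch below annualizes the two monthly prices quoted above. It assumes twelve months billed at list price, with no taxes, trials, or promotional discounts, which may not match what a given subscriber actually pays.

```python
from decimal import Decimal

# Monthly list prices as quoted in this article.
MONTHLY_PRICES = {
    "Google AI Pro": Decimal("19.99"),
    "Google AI Ultra": Decimal("249.99"),
}


def annual_cost(monthly):
    """Twelve months at the monthly list price (assumes no discounts)."""
    return monthly * 12


annual = {plan: annual_cost(price) for plan, price in MONTHLY_PRICES.items()}
ratio = MONTHLY_PRICES["Google AI Ultra"] / MONTHLY_PRICES["Google AI Pro"]

for plan, cost in annual.items():
    print(f"{plan}: ${cost} per year")
print(f"Ultra costs about {round(ratio, 1)}x as much as Pro")
```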

SynthID Detector

Google released a verification tool for identifying AI-generated content.

The SynthID Detector checks uploaded content for Google's watermark, helping differentiate between human and AI-created media.

Appreciate this roundup! Didn’t have time to watch everything. Super helpful breakdown 🙌

Solid that Google is making waves in the AI boom... do you guys think it will compensate if their search revenue evaporates?

No, but also I don't think they have a choice. Generative search is going to eat traditional search whether they like it or not, so they might as well get a piece of the new action.

Yeah, but people may still do a lot of their generative search through Google if they put Gemini into it. If they make search as good as an LLM but tuned specifically for search, they may still keep it up. Who knows.

Google is really leaning into the whole 'AI can do everything!' vibe

Wild how fast Google’s pivoted from “AI features” to “AI as the interface.” Everything’s blending into that invisible layer between user and data - the real power shift isn’t in the tools, it’s in who controls that layer.

The Veo + Flow combo is wild if it works the way they claim. Text-to-video with audio plus scene transitions? That’s not an update, that’s a whole new content pipeline. Curious how it holds up in real production workflows.

This is a really exciting roundup — it’s clear Google is going all-in on AI this year. The Deep Think and Thought Summaries features sound especially promising for developers who want more transparency and control when working with large models.

I’m also curious to see how Veo 3 performs in real-world creative projects — adding sound generation could be a big step forward for AI video tools.

The pricing for AI Ultra feels steep, but it makes sense given the new capabilities. Overall, it’s impressive how Google is connecting everything — from Workspace to Android XR — into one unified AI ecosystem

Really enjoyed this write-up 🙌 — it’s wild how small AI projects, when focused, can actually bring in consistent revenue. What stood out to me is how each app solves just one clear problem (journaling, resumes, landing pages, SEO) but does it really well.

I’ve been experimenting with similar “vibe coding” projects, and one thing that helped me was building fast prototypes and testing prompts in real use cases. I actually documented some of that process over at aiwebix , in case anyone here is exploring lightweight AI app ideas.

Love seeing more indie hackers share their journey—it feels less like building alone and more like co-creating with the community 🚀

agree

this is cool

Google has updated its guidance: AI-related content is now acceptable up to a certain limit.

Great update from Google

Appreciate you sharing the raw journey. I’m doing something similar (public waitlist + landing page first) and I’ve been debating how much to automate vs. do manually early on. Did you find early user feedback shifted your roadmap much?

that's really wonderful

Crazy how fast things are moving, the Deep Think and Thought Summaries stuff really caught my attention. Giving models more time to “think” and exposing that reasoning is a huge win for devs trying to debug AI behavior in actual products.

Gemini AI – Advanced model (Gemini 1.5 Pro) with better memory and integration across Gmail, Docs, and Android.

AI Overviews in Search – Summarized answers powered by AI.

Veo & Imagen 4 – New text-to-video and text-to-image generation tools.

AI Teammate & Gems – Customizable AI assistants in Google Workspace.

LearnLM & NotebookLM – AI tools for education and personalized learning.

TPU v6 & v7 – More powerful AI chips for enterprise use.

Ask Photos – Natural language search in Google Photos.

Android 15 AI – Scam detection and smarter features on-device.

Absolutely inspiring — thank you for sharing this so openly.

People say Google is the next Google

The thing I read today that made the most sense.

hi i am a very serious contender.

Seeing the recent evolution in the AI sphere, I think Google is becoming a very serious contender. But personally it scares me a bit because I don't fully trust AI.

Google I/O 2025 really shows how AI is becoming a core part of everything we use daily. Veo 3 is mind blowing!

Google I/O 2025 proves AI isn't just a feature—it's Google’s entire future strategy.

Google's AI announcements at I/O 2025 showcase impressive advancements, but integrating them into user-friendly products remains a challenge. Success depends on seamless execution and clear, accessible applications.

"Google I/O 2025’s AI advancements are mind-blowing—especially Veo 3’s audio-video generation! Speaking of top-tier audio, have you checked out? It’s my go-to for the best music, and with AI now crafting soundtracks, maybe Gemini will curate playlists next! 🎶🤖 #FutureOfMusic"

Sounds like Google's AI is taking us straight into the sci-fi realm, huh?

Google just announced features like Gemini's 'Deep Think' and 'Thought Summaries' to improve AI reasoning and transparency. As builders, do you think these kinds of features actually help you trust AI outputs more or do you see them mainly as developer debugging tools?

Google I/O really threw in a whole buffet of AI updates, huh? From Deep Think to the shiny new tools for video generation, it’s like they’re trying to make our lives easier and way more entertaining all at once.

i like it