I wrote a fiction book with GPT-3! AMA

So... there was some really stormy weather tonight in Barcelona, so instead of going out after dinner I asked the GPT-3 API to help me write a story.

In this post you'll find...

- The process I used

- The AI model I used (hint: not ChatGPT)

- How I avoided the early mistakes and common bugs such as endless loops or incomplete chapters

- And of course, I'm giving you the novella itself (as a free downloadable PDF, no opt-in necessary) so you can check out the result!

It starts with structure (chapter titles)

The premise starts with an experience I had last weekend at dinner with my friend David. He had this strange MasterCard concierge service that got us tables at overbooked places that really shouldn't have accepted our reservations (and had flatly told us "no" when we called them directly).

I had joked to David that his concierge service was probably a super spooky organization...

So I asked GPT-3 to use this as the premise of a story.

Why not ChatGPT

As you can see in the screenshot, I don't use ChatGPT (it's down a lot of the time), and I use the OpenAI Playground instead:

https://beta.openai.com/playground

(you can access it with the same login)

Why I like the Playground better:

- It uses a model from the same GPT-3.5 family (if you select "text-davinci-003" as the model)

- It's never down, because the Playground is for business users, whereas ChatGPT is for the general public (almost all of whom are free users).

- The Playground basically uses your API key without you needing to figure out what your API key is and how to use it

- You can do more than ask questions and get replies: you can use it to complete missing text, etc.

- You always know how many tokens (~words) you're using in your prompt and how many remain for the A.I. to reply.

(Language models have a limited memory. GPT-3.5 (text-davinci-003) can only handle about 4,000 tokens (~3,000 words), counting both your request and the answer. ChatGPT goes higher, ~6,000 words in a conversation, but has other limitations.)
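To make the shared budget concrete, here is a minimal Python sketch of the arithmetic. It assumes the rule of thumb above (4,000 tokens ≈ 3,000 words, i.e. ~0.75 words per token) and treats 4,000 tokens as the limit; a real tokenizer such as OpenAI's tiktoken would give exact counts, and the function names are illustrative only.

```python
# Rough token-budget arithmetic for a model whose prompt and completion
# share one context window. Uses the ~0.75 words-per-token rule of thumb,
# not a real tokenizer.
CONTEXT_TOKENS = 4_000   # approximate limit quoted for text-davinci-003
WORDS_PER_TOKEN = 0.75   # ~3,000 words per 4,000 tokens


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens an English text of `words` words uses."""
    return round(words / WORDS_PER_TOKEN)


def tokens_to_words(tokens: int) -> int:
    """Estimate how many words fit in `tokens` tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def completion_budget(prompt_words: int, context_tokens: int = CONTEXT_TOKENS) -> int:
    """Tokens left for the model's answer after the prompt is counted."""
    return max(0, context_tokens - words_to_tokens(prompt_words))


if __name__ == "__main__":
    left = completion_budget(prompt_words=1_875)
    print(f"{left} tokens left, roughly {tokens_to_words(left)} words")
```

With a 1,875-word prompt, this leaves about 1,500 tokens, or roughly 1,100 words, for the answer.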

The synopsis

Here is how GPT-3 described this story when I asked it to turn my initial prompt into a synopsis for a novel:

When Sébastien and David discover the seemingly-magical powers of the concierge service offered by David's Mastercard, they are amazed.

However, their wonder soon turns to fear when they discover that the concierge service is connected to a powerful mafia organization.

As Sébastien and David dig deeper into the mystery, they uncover a criminal network that stretches across the globe, and they soon find themselves in the middle of a dangerous game of cat and mouse with the mafia.

With their lives on the line, the two friends must use all of their wits and courage to outsmart the mafia and survive.

Will they be able to escape the mafia's clutches, or will they become yet another victim of the criminal underworld?

After getting a chapter list and a synopsis for the story, I had GPT write each chapter for me.

Why ask for a chapter at a time

In the end, the book is about 7,000 words. In theory, that is just within the realm of what ChatGPT can handle, and only about 2 or 3 requests to text-davinci-003.

However, I think these models are just not good at writing long-form text at all. Because the model simply predicts "what comes next", it is perfect for a word, very solid for a sentence, trustworthy for a paragraph, good enough for a page or two, and completely disastrous for 10 pages in one go.

By having it generate the chapter titles first, and then asking it for one chapter at a time (with a good chapter title), I got good results because it was only trying to advance one "leg" of the story at a time, and it knew where it was going.
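The outline-first approach can be sketched like this in Python. It assumes the chapter titles already exist (generated earlier, as described above) and takes a hypothetical `complete` function standing in for whatever sends a prompt to the model; the prompt wording is illustrative, not the exact prompt used for the book.

```python
from typing import Callable, List

# Hypothetical placeholder: any function that sends a prompt to the model
# and returns the generated text. Not a real library API.
Complete = Callable[[str], str]


def write_novel(premise: str, chapter_titles: List[str], complete: Complete) -> List[str]:
    """Generate a story one chapter at a time, each aimed at a known title."""
    outline = "\n".join(f"Chapter {i}: {t}" for i, t in enumerate(chapter_titles, 1))
    chapters: List[str] = []
    for number, title in enumerate(chapter_titles, 1):
        # Give the model the previous chapter so the story stays continuous.
        previous = f"Previous chapter:\n{chapters[-1]}\n\n" if chapters else ""
        prompt = (
            f"Premise: {premise}\n\n"
            f"Chapter list:\n{outline}\n\n"
            f"{previous}"
            f'Write Chapter {number}, "{title}". '
            "Advance the story only as far as this chapter's title."
        )
        chapters.append(complete(prompt))
    return chapters
```

Because every request sees the full chapter list but is only asked for one title, each completion has a fixed destination instead of an open-ended "keep writing".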

You can notice this in the book: GPT-3 always tries to "land" on the chapter title as the theme of the action.

First trials (and failures)

Initially I thought that if I just used GPT's "insert mode", it would:

- not cost me anything as it's free in beta

- be really straightforward, since I could simply put [insert] between each of the chapter headings and be done

The result wasn't good at all.

How I ended up structuring my prompts

After a few tries, what worked for me was to have really detailed prompts, similar to what I use when delegating to my (human) colleagues:

- Start the prompt by telling it what I want:

"I want you to write Chapter 7 of a novel, a mafia thriller. Each chapter is about 2,000 words."

- Give it the synopsis so it has context

- Give it the chapter list so it knows what came before and what comes after

- Give it the (GPT-generated) summary of previous chapters plus the full text of the most recent 1 or 2 chapters (even text-davinci-003, the most powerful model, is restricted to 4,000 tokens of context: "prompt plus completion" can't exceed ~3,000 words, so you can't feed it a whole book and ask for the next chapter)

- Be super clear about where each chapter begins and ends

- Finish the prompt by telling it what I want:

"In about 2,000 words, write Chapter 7 of this mafia thriller. Include dialogue and descriptions."
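Here is one possible way to assemble a prompt with that structure, as a Python sketch. The section labels, the `=== ... ===` chapter markers, and the `recent` parameter (how many previous chapters go in as full text) are assumptions for illustration; older chapters are represented only by their summaries so the prompt stays inside the ~4,000-token window.

```python
from typing import List


def build_chapter_prompt(
    synopsis: str,
    titles: List[str],
    summaries: List[str],  # one summary per finished chapter
    chapters: List[str],   # full text of finished chapters
    target: int,           # 1-based number of the chapter to write
    words: int = 2_000,
    recent: int = 2,       # how many previous chapters to include in full
) -> str:
    done = target - 1
    parts = [
        # Open by stating the task...
        f"I want you to write Chapter {target} of a novel, a mafia thriller. "
        f"Each chapter is about {words:,} words.",
        f"Synopsis:\n{synopsis}",
        "Chapter list:\n" + "\n".join(f"{i}. {t}" for i, t in enumerate(titles, 1)),
    ]
    first_full = max(1, done - recent + 1)  # earliest chapter given in full
    if first_full > 1:
        lines = [f"Chapter {i}: {summaries[i - 1]}" for i in range(1, first_full)]
        parts.append("Summary of earlier chapters:\n" + "\n".join(lines))
    for i in range(first_full, done + 1):
        # Explicit markers make each chapter's beginning and end unambiguous.
        parts.append(
            f"=== Chapter {i}: {titles[i - 1]} (start) ===\n"
            f"{chapters[i - 1]}\n"
            f"=== Chapter {i} (end) ==="
        )
    # ...and close by restating it.
    parts.append(
        f"In about {words:,} words, write Chapter {target} of this mafia thriller, "
        f'"{titles[target - 1]}". Include dialogue and descriptions.'
    )
    return "\n\n".join(parts)
```

Keeping only the last one or two chapters verbatim keeps the prompt size roughly constant as the book grows.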

I let it improvise the first draft each time to see where the story went.

It was a wild trip. Clearly GPT hovers between thinking David and I are both pushovers for the mob, or badasses ready to take on anything. In the book's circumstances I think I'd end up somewhere in the middle, so I'll allow it.

Prompt and response length

For my chapters, I asked for a completion in about 2,000 words.

Because of how long my prompt was, I often only had ~1,500 tokens left for the AI to write the answer (so... 1,200 words maybe).

But:

- Most chapters ended up under 1,000 words anyway; however...

- Telling the AI it had a 2,000-word "budget" made it write better, with more detail, than when I didn't specify a length or asked for something shorter

Polishing the content (warning: spoilers!)

The first draft of some chapters was eerily good, and I took them almost verbatim. For others, I regenerated up to three times, prodding the AI to keep the threads I liked and discard the others, and then either chose one of the three versions or combined them with minor adjustments.

As a note, I found these to be the main literary issues with GPT's fiction:

Note: Don't read this before the book; it might spoil the reading experience.

- It really likes having two characters "look at each other in shock" or surprise, etc. Having 2 main characters in the story meant that it did that a lot.

- Whatever cliffhanger is in the synopsis will come back to haunt you one to three times per chapter. I've had to delete quite a few "They had just entered a world of danger and intrigue."

- Characters go from "having no choice" (passive) one minute to deciding "this is the only way forward" (active) the next. It's really the same thing, but GPT sees it as the only way out of any dialogue or conflict.

- It doesn't know how to wrap up a story. Without guidance, the last few chapters always ended with the good guy winning and feeling proud, and the last paragraph was typically along the lines of:

"But for now, they had won. They had taken down a criminal mastermind, and they had saved the world. And that was enough."

or

"He had won the battle, and he had won the war."

or

"He had made a desperate gamble, and it had paid off."

All of these work fine in a summary, but in a book they're trite.

- and of course, the dreaded loop of death...

The loop of death

Sometimes, if two similar events happen in a row, GPT gets stuck in an endless loop. That's because GPT is only a "prediction" model after all: it tries to guess which word comes next.

So if it comes back to where it started, the loop becomes infinite (in one case, Sébastien gets more and more furious as he is thwarted every day in the same Groundhog Day manner).

In this case, I had to stop the completion, and write a better prompt.
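Stopping a loop by hand works, but a simple check can also flag looped drafts automatically. The Python sketch below is a heuristic, not a Playground feature: it splits the text into sentences (naively, on `.`, `!` or `?`) and flags any sentence of at least five words that appears more than twice. Both thresholds are assumptions to tune; the API's `frequency_penalty` and `presence_penalty` settings are another lever against repetition.

```python
import re
from collections import Counter
from typing import List


def split_sentences(text: str) -> List[str]:
    """Naive split on ., ! or ? followed by whitespace; lowercases and collapses spaces."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [" ".join(p.lower().split()) for p in parts if p.strip()]


def looks_looped(text: str, max_repeats: int = 2, min_words: int = 5) -> bool:
    """Flag a draft in which some non-trivial sentence appears more than max_repeats times."""
    # Short sentences ("Yes.", "He ate.") repeat legitimately, so skip them.
    counts = Counter(s for s in split_sentences(text) if len(s.split()) >= min_words)
    return any(n > max_repeats for n in counts.values())
```

A check like this only catches verbatim repetition; a loop that paraphrases itself still needs a human eye and a better prompt.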

Finding a title

Then, when all was said and done, I asked GPT to summarize all the chapters, and then I asked it, based on the whole book's summary, for title ideas.
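That summarize-then-ask approach could look like the following Python sketch. Summarizing chapter by chapter first is what lets a ~7,000-word book fit into a ~4,000-token window; `complete` is a hypothetical placeholder for a model call, and the prompt wording is illustrative.

```python
from typing import Callable, List

# Hypothetical placeholder for a call that sends a prompt to the model
# and returns its text. Not a real library API.
Complete = Callable[[str], str]


def suggest_titles(chapters: List[str], complete: Complete) -> str:
    """Summarize each chapter, then the whole book, then ask for title ideas."""
    chapter_summaries = [
        complete(f"Summarize this chapter in a few sentences:\n\n{text}")
        for text in chapters
    ]
    book_summary = complete(
        "Combine these chapter summaries into one summary of the whole book:\n\n"
        + "\n".join(f"Chapter {i}: {s}" for i, s in enumerate(chapter_summaries, 1))
    )
    return complete(f"Suggest ten title ideas for a novel with this summary:\n\n{book_summary}")
```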

So, there you go, all that was left was pasting the whole book into a word processor and getting it done as a PDF I could pass around to my friends!

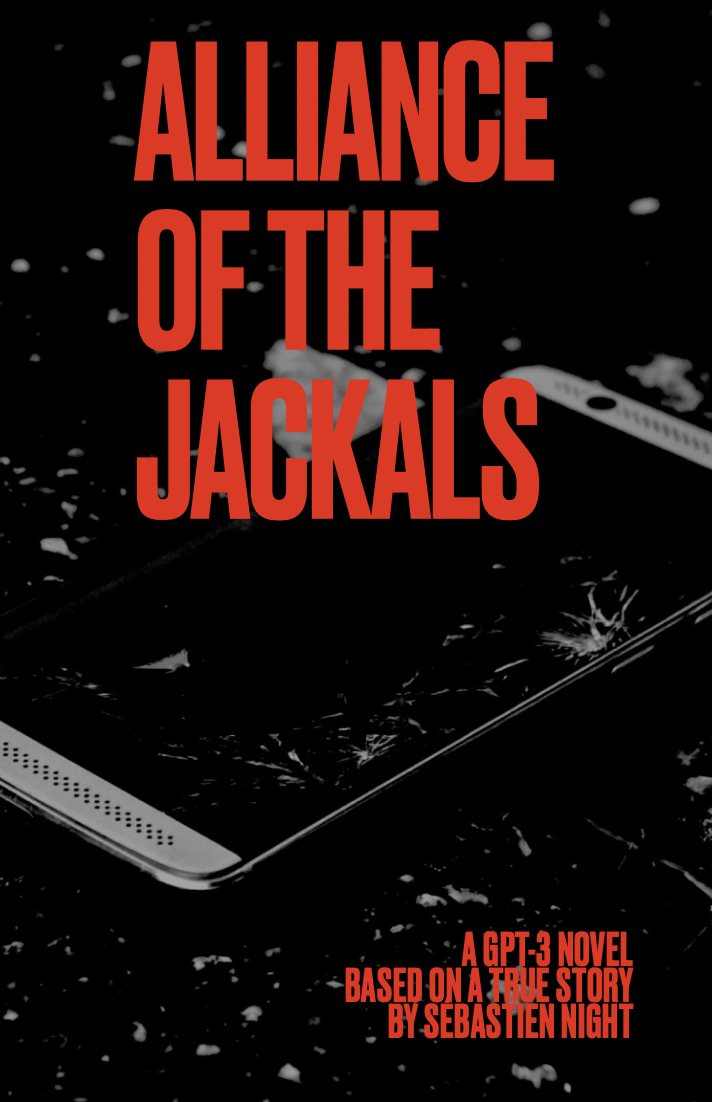

So... I've attached my new book here (at 7,000 words, it's actually more of a novelette):

Alliance of the Jackals (PDF, 1 MB)

It's a short read, and it's licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. (You can share it, link it, embed it, forward it as you wish but not resell it).

It was a very interesting evening project.

I've had a "real" novel in the works for 4 years now, where I've struggled to put coherent chapters together, and here I've gotten 10 chapters and a complete story on a whim, based on a few prompts and some guidance. Wild!

Great work. All these AIs seem to be created to write books and fix code for inattentive programmers.

Yep. It's clear that AI has come a long way in recent years and can now do things we once thought were only possible for humans. It's not just programmers taking advantage of AI technology; many students now use it to complete their assignments. While I understand the appeal of using AI to get work done quickly and easily, I prefer using more traditional sources like https://ca.edubirdie.com/assignment-writing-services for help from professional writers. With these services, I know I can get high-quality writing that is free of plagiarism. When it comes to writing, the most important thing is the quality of the work, and that's why I prefer to use these kinds of sources rather than relying on AI.

Hi, we love this content! We are currently running a #newsletter specialized in #ArtificialInteligence named #GenAI. Can we feature this in our upcoming edition and give you credit and a link back to your profile? Reply using @goodbitsio and #GenAl if you’d like us to do so.

Hey @goodbitsio #GenAl, I'm actually working on an AI tool as well, called Evoke, and would love to have it featured in your AI newsletter.

We host open source AI models on the cloud accessible through API for devs and businesses building AI apps.

Feel free to link to the website or my twitter profile: https://twitter.com/TheRealEtch

LOL @RichardGao, master of the hustle

Thanks! I'm sure you've seen me on here quite a lot :D

Yes! I've looked at your website regularly, in fact, to see what models you've been supporting.

I'm surprised that you've started (and still seem to mostly run) with Stable Diffusion, instead of the LLMs like BLOOM or GPT-NeoX. Is there a reason for that? Do you think the market for API generation of images is larger?

The market for LLMs is actually larger, as evidenced by the success of GPT-3 and ChatGPT. The main issue right now is that the open-source LLMs that exist just aren't very good.

BLOOM 176B is the best at the moment, and even then, it underperforms GPT-3 by quite a large margin. Hosting it also requires quite a large amount of compute.

The biggest of the smaller models, GPT-NeoX-20B, performs worse than BLOOM.

With the existence of GPT-3 as an API with a relatively low price, this really isn't competitive unless you go the "uncensored" route (which OpenAI is shying away from doing), and that market, although booming, is obviously going to be smaller than the general LLM market.

For image generation, DALL-E and Midjourney have big disadvantages compared to some of their competitors, and we have an open-source, easily alterable AI model: Stable Diffusion. This makes getting into that market much easier.

Until a good open-source LLM is released, we are probably going to make LLM integration with Evoke a second priority. However, we do have plans to integrate Pygmalion, a conversation-focused LLM that seems to outperform GPT-3 on certain tasks.

Curious, are you planning to do anything with image generation? Or is it mostly working with LLMs for you right now?

Actually yes, @RichardGao :

I am looking to do something very specific (a very custom style of thumbnail generation, turning one/several frames of face-to-camera video into a comics-style "avatar" of the speaker for the thumbnail) and I've been wondering whether Stable Diffusion can achieve it.

This would solve a problem that I have with my ingress videos, which might be blurry/in motion/not smiling/with dull colors and therefore are not great for thumbnails.

If this is possible with SD, then I'd be very happy to use Evoke's API for it as I don't want to self-host Stable Diffusion.

Do you think this is a task that an SD prompt could achieve?

You should definitely be able to do this with Evoke.

The main issues you might be facing could probably be fixed with better prompting and a different Stable Diffusion model.

We're getting some other models up on Evoke likely this week. If you'd like to stay tuned once we launch the new models, feel free to join the discord: https://discord.gg/sfvSMkBhMM

In addition, we're getting prompt tuning soon, so you should be able to get better images without excessive prompting.

Also happy to answer any questions here or on Discord

Hi @goodbitsio (@Goodbits_io )

Yes, you're welcome to feature this post, any part of its content, and even the PDF or parts of it as you wish (it's under a Creative Commons licence) in your #GenAI newsletter. However, please credit me (Sébastien Night) and link to my website (Nuro: https://nuro.video/en/), not my Indie Hackers profile.

Thank you!

Excellent post! Seen a lot of people writing fiction with ChatGPT/GPT-3 but rarely any guides on it

Thank you! I just updated the post with more info on my prompts, and why I used GPT-3 but not ChatGPT.

I also added a list of caveats about GPT's fiction-writing.

Your comment made me want to make the post even more useful. I hope it helps.

Sébastien