From 4 hours to 4 minutes: curating a newsletter with AWS Claude 3 and GPT-4 for under $0.19 a day (Part 2)

by Joao Vitor Martins

In part 2, I detail how I managed to reduce the curation time from 4 hours to 4 minutes.

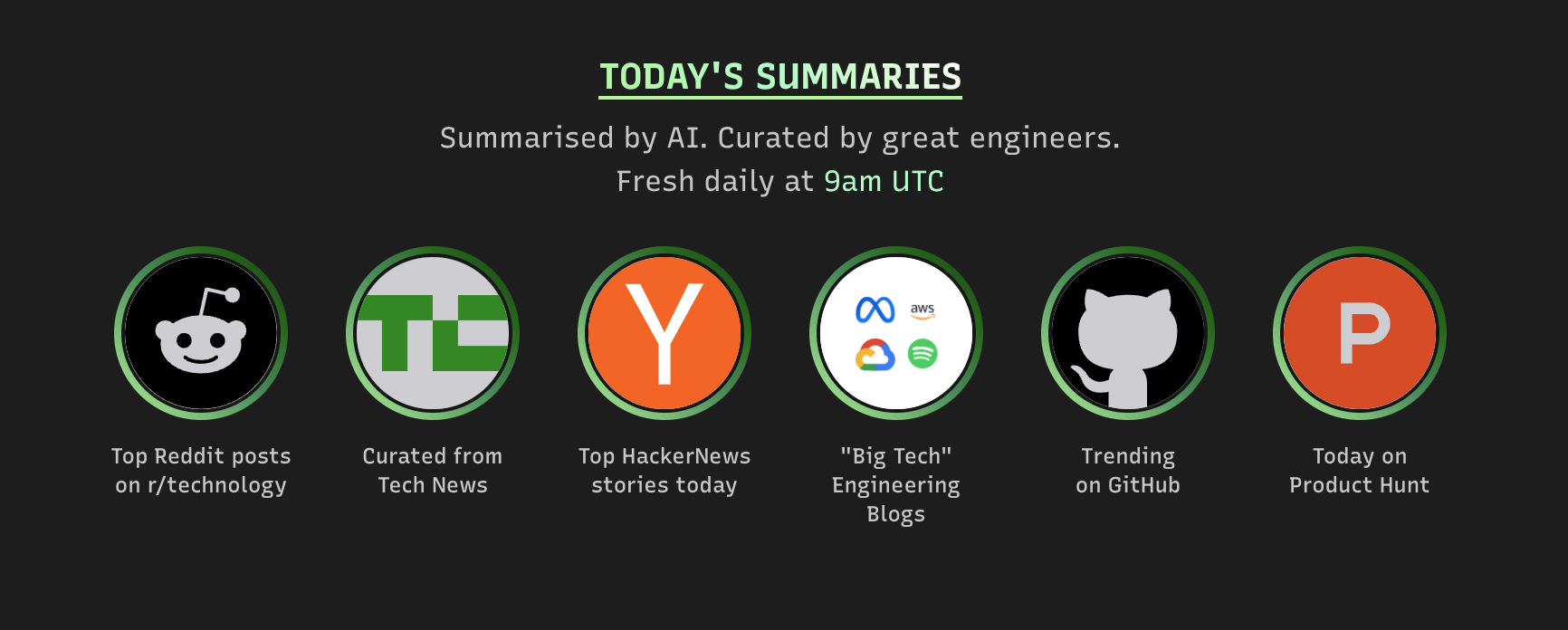

I start from the raw articles and RSS feeds of the selected sources and, from there, run five steps that automate the daily news stories and the weekly newsletter, summarising content with AWS Bedrock (Claude 3 Haiku) and OpenAI’s GPT-4. Here is how:

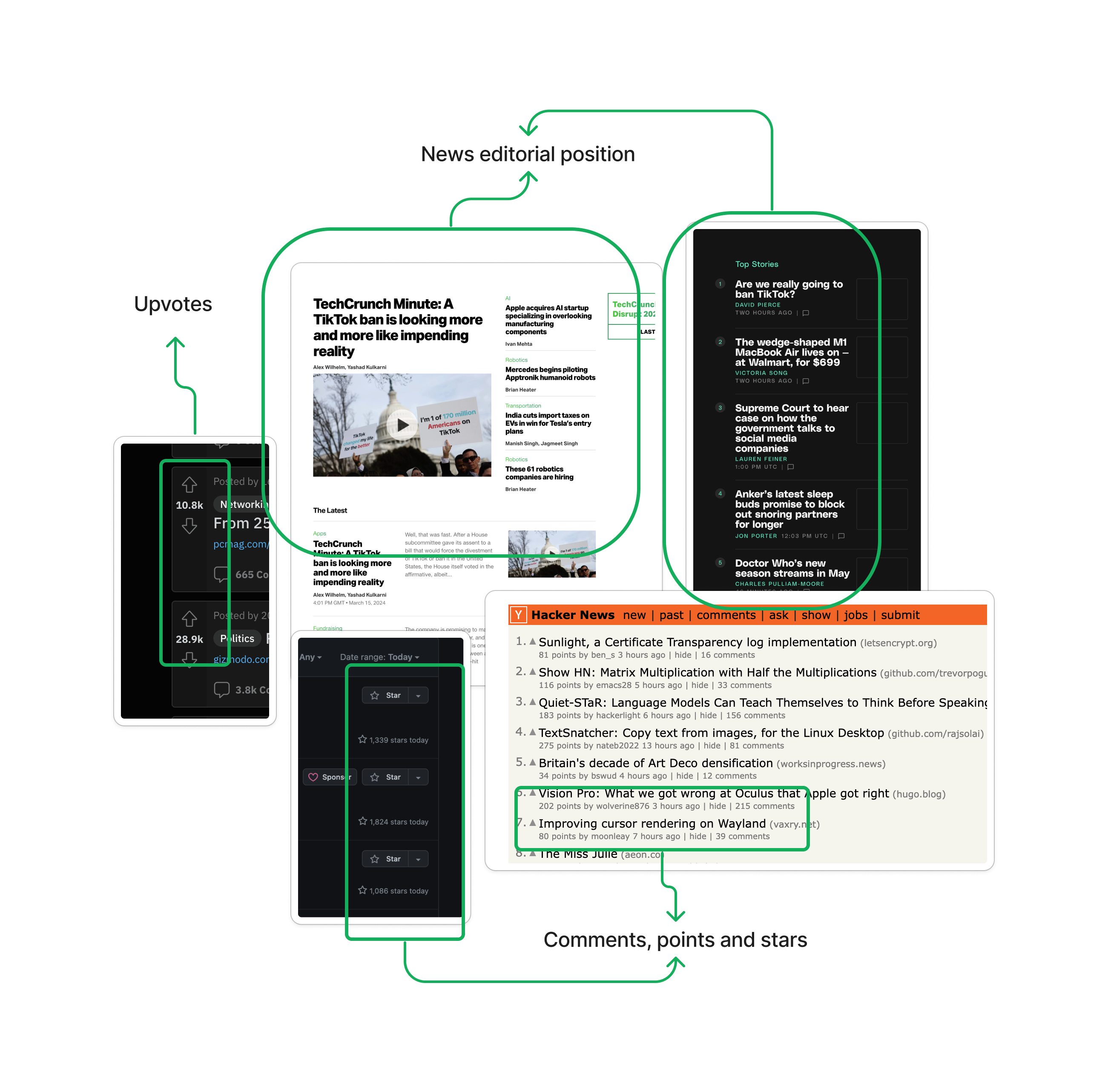

Step 1. Ranking: selecting the top 10 articles from each source.

Some RSS feeds include the number of comments, upvotes, likes or stars, which makes ranking much easier. Others use page position to highlight news pieces or publish their own rankings on their main page. I combine these signals into a function that ranks the articles by relevance and importance, based on what the community voted for and/or the editors’ placement choices.
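A minimal sketch of such a ranking function, in Python. The `Article` fields, the 0.7 vote weight and the linear position score are illustrative assumptions, not the exact formula used for TechTok: engagement counts are normalised against the most-voted article of the same source and blended with front-page position, while sources without engagement data fall back to position alone.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Article:
    title: str
    url: str
    position: int  # 0-based position in the feed or on the source's main page
    votes: Optional[int] = None  # comments/upvotes/likes/stars, if the feed exposes them


def rank_articles(articles: list[Article], top_n: int = 10, vote_weight: float = 0.7) -> list[Article]:
    """Hypothetical scoring: blend normalised community votes with editorial position.

    Assumes all articles passed in come from the same source.
    """
    if not articles:
        return []
    n = len(articles)
    max_votes = max(a.votes or 0 for a in articles)

    def score(a: Article) -> float:
        # Earlier positions score higher: first item -> 1.0, last item -> 1/n.
        position_score = 1.0 - a.position / n
        if a.votes is None or max_votes == 0:
            return position_score  # no engagement data: editorial position only
        vote_score = a.votes / max_votes
        return vote_weight * vote_score + (1 - vote_weight) * position_score

    return sorted(articles, key=score, reverse=True)[:top_n]
```

Keeping the score per source avoids comparing, say, GitHub stars with Hacker News points directly; each source contributes its own top 10.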

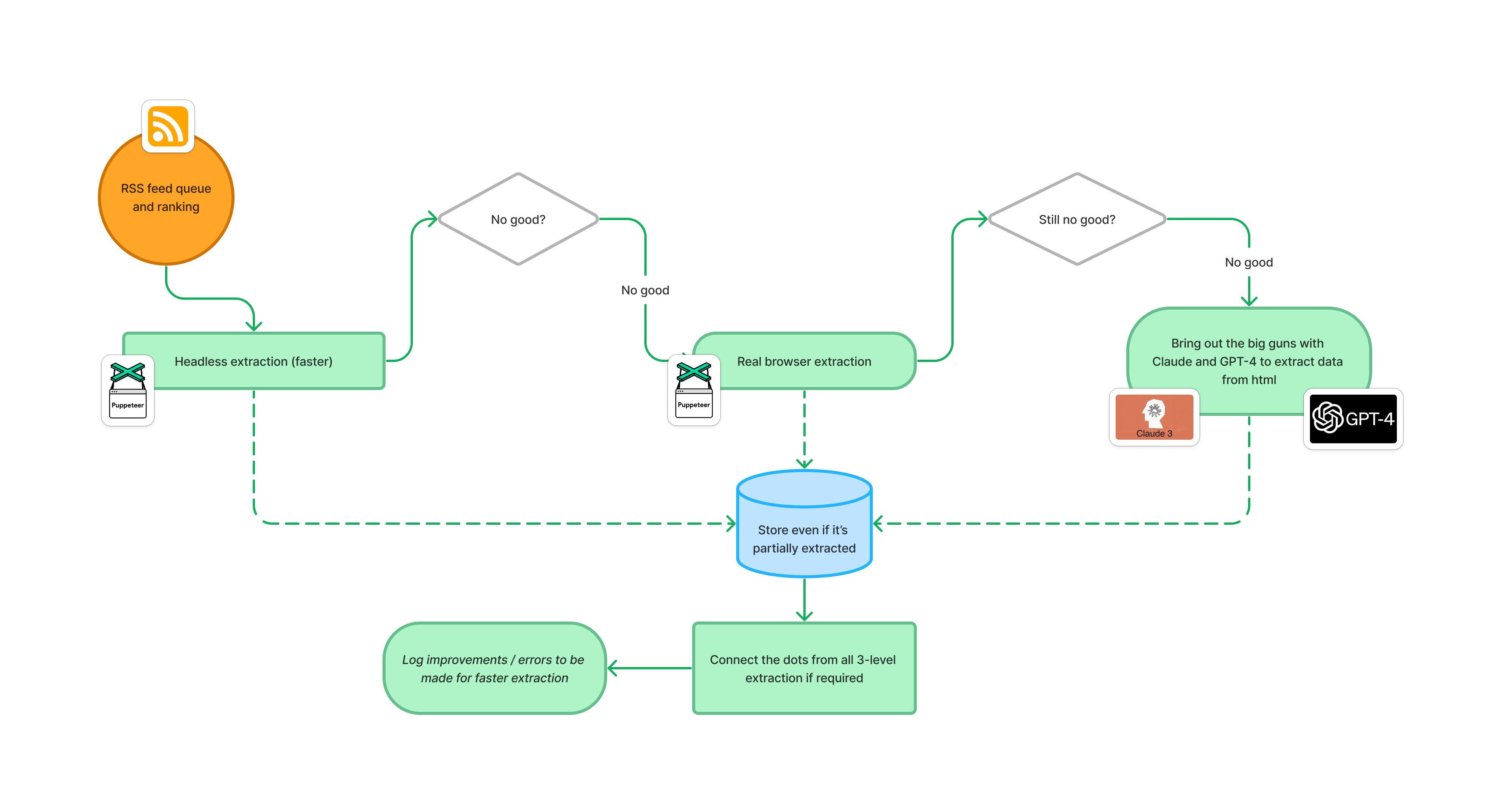

Step 2. Enriching: gathering more relevant data from each one of the articles.

I run the top 10 articles of each source through an enricher that tries to extract the article’s main image, credits, authors and, where possible, the full content. It also extracts the article’s main topics and categories, which are useful in the next step.
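As an illustration of the programmatic side of enrichment, the sketch below pulls the main image, authors and topics from common `<meta>` tags (Open Graph `og:image`, `author`, `article:author`, `article:tag`, `keywords`) using only Python’s standard-library `html.parser`. Which tags a given source actually populates is an assumption; credits and full-text extraction are left out.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Collects <meta> tag contents keyed by their lower-cased name/property."""

    def __init__(self) -> None:
        super().__init__()
        self.meta: dict[str, list[str]] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        key = attributes.get("property") or attributes.get("name")
        content = attributes.get("content")
        if key and content:
            self.meta.setdefault(key.lower(), []).append(content.strip())


def extract_metadata(html: str) -> dict:
    collector = MetaTagCollector()
    collector.feed(html)
    collector.close()
    meta = collector.meta
    # "keywords" is usually a single comma-separated string.
    keywords = [k.strip() for kw in meta.get("keywords", []) for k in kw.split(",") if k.strip()]
    return {
        "image": meta.get("og:image", [None])[0],
        "authors": meta.get("author", []) + meta.get("article:author", []),
        "topics": meta.get("article:tag", []) + keywords,
    }
```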

This step has a 3-layer fallback that combines programmatic extraction with LLM-based scraping. Here is a diagram:

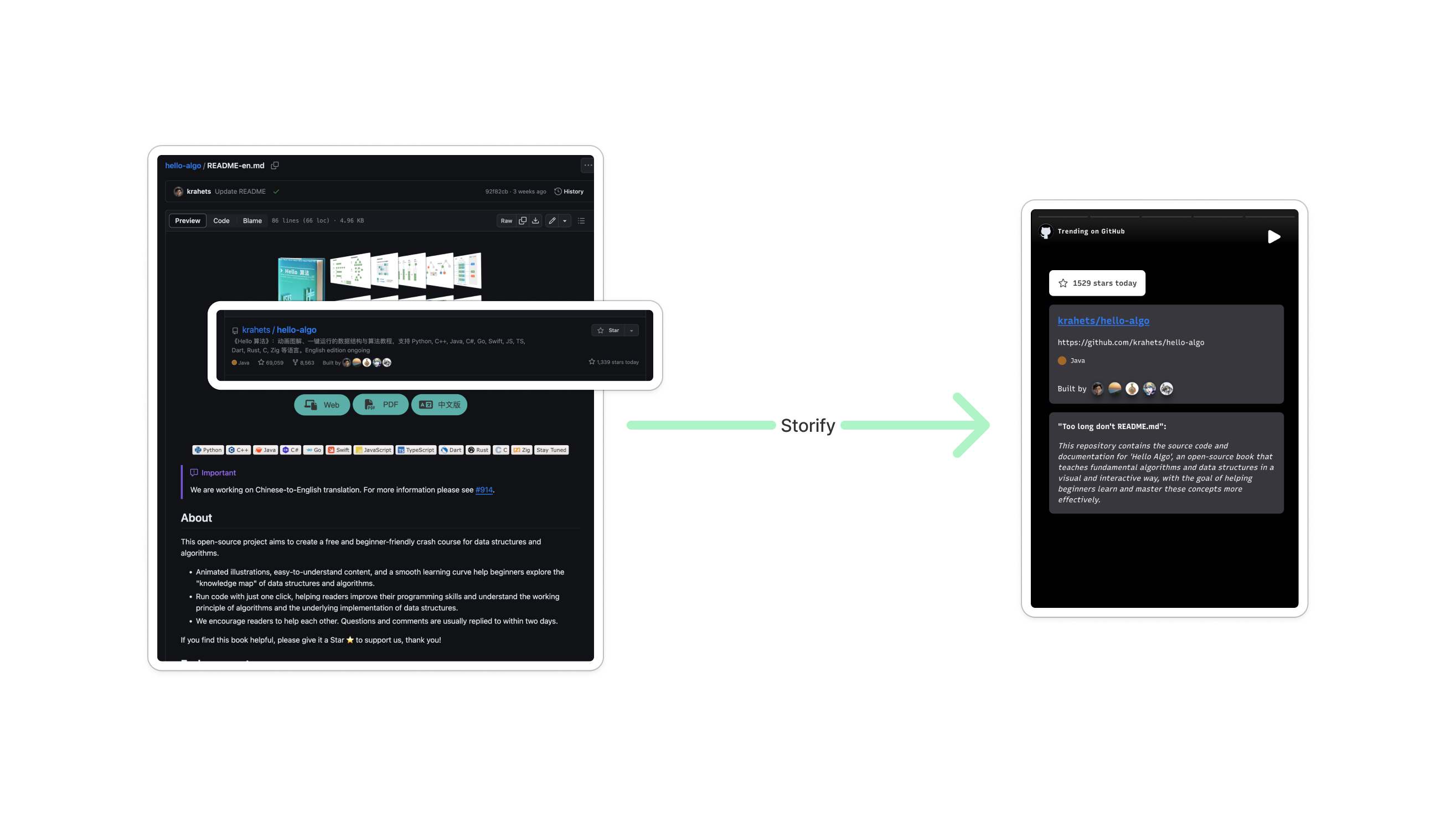
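The fallback itself can be expressed as an ordered chain of extractors, where the first one that returns non-empty content wins. This is a sketch only: `enrich_with_fallback` is a hypothetical helper, and each real layer (for example feed content, HTML parsing, LLM scraping) would be a function you supply.

```python
from typing import Callable, Optional

# Each layer takes a URL and returns extracted text, or None if it couldn't.
Extractor = Callable[[str], Optional[str]]


def enrich_with_fallback(url: str, layers: list[tuple[str, Extractor]]) -> tuple[str, Optional[str]]:
    """Try each (name, extractor) pair in order; return the first non-empty result."""
    for name, extract in layers:
        try:
            content = extract(url)
        except Exception:
            # A failing layer (timeout, parse error, bad LLM reply) just falls through.
            content = None
        if content and content.strip():
            return name, content
    return "none", None
```

A call might look like `enrich_with_fallback(url, [("feed", from_feed), ("parser", from_html), ("llm", from_llm)])`, where the three functions are placeholders. Ordering the layers from cheapest to most expensive keeps the LLM as the last resort.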

Step 3. Summarising: I prompt AWS Claude 3 and GPT-4 to summarise each article that has been ranked and enriched.

Once I have the enriched content, I ask the LLMs for a summary, giving a different “system prompt” for each source. For example, for GitHub trending repos, I’ll use something like this:

You are a helpful github repository summariser.

I'll send you the content of a README.md of a github repository and you should summarise, in one sentence, what the repository does and return it as a json. Keep the summarising sentence under 200 characters if possible.

* Be direct about the repository's purpose.

* Try to highlight what this could be used for.

This is what the response should look like:

{
"summary": [ "This is the summary of the repository" ]
}

Please follow the format above and respond only with a valid json.
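For Claude 3 Haiku on AWS Bedrock, a per-source system prompt like the one above goes into the `system` field of the Anthropic Messages request body. The sketch below assumes a hypothetical `SYSTEM_PROMPTS` dictionary keyed by source (holding a condensed version of the prompt above) and validates the JSON reply before accepting it. The 200-character limit is a soft "if possible" in the prompt, so it is not enforced here. The GPT-4 path would be analogous through OpenAI's chat completions API; `boto3` is imported inside the function that calls AWS so the pure helpers run without it.

```python
import json
from typing import Optional

HAIKU_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"

# Hypothetical per-source prompts; the "github" entry condenses the prompt above.
SYSTEM_PROMPTS = {
    "github": (
        "You are a helpful github repository summariser. I'll send you the content of "
        "a README.md of a github repository; summarise in one sentence what the "
        "repository does, under 200 characters if possible. Respond only with valid "
        'json shaped like {"summary": ["..."]}.'
    ),
}


def build_bedrock_body(source: str, content: str, max_tokens: int = 300) -> str:
    """Request body for the Anthropic Messages API on Amazon Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": SYSTEM_PROMPTS[source],
        "messages": [{"role": "user", "content": [{"type": "text", "text": content}]}],
    })


def parse_summary(raw: str) -> Optional[str]:
    """Return the summary sentence, or None if the reply is not the expected JSON."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    summary = data.get("summary") if isinstance(data, dict) else None
    if isinstance(summary, list) and summary and isinstance(summary[0], str):
        return summary[0].strip()
    return None


def summarise_with_haiku(source: str, content: str) -> Optional[str]:
    import boto3  # needs AWS credentials and Bedrock model access at call time

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(modelId=HAIKU_MODEL_ID, body=build_bedrock_body(source, content))
    payload = json.loads(response["body"].read())
    return parse_summary(payload["content"][0]["text"])
```

Returning `None` on malformed output, instead of raising, lets the pipeline mark that summary for manual review rather than stopping the whole run.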

I only summarise the top 5 articles of each source; later in the curation process, I can summarise the other 5 if the top-ranked ones don’t turn out to be good content for the daily news.
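One way to structure this is to summarise the first five ranked articles eagerly and keep the rest in a per-source reserve, summarising from the reserve only when an article is rejected during curation. `SourceQueue`, `split_for_summary` and `promote_next` are hypothetical names, and `summarise` stands in for whichever LLM call you use.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Summariser = Callable[[dict], str]


@dataclass
class SourceQueue:
    """Per-source state: summarised articles plus ranked ones held in reserve."""
    summarised: list = field(default_factory=list)
    reserve: list = field(default_factory=list)


def split_for_summary(ranked: list, summarise: Summariser, eager: int = 5) -> SourceQueue:
    # Only the top `eager` articles cost an LLM call up front.
    queue = SourceQueue(reserve=list(ranked[eager:]))
    for article in ranked[:eager]:
        queue.summarised.append({**article, "summary": summarise(article)})
    return queue


def promote_next(queue: SourceQueue, summarise: Summariser) -> Optional[dict]:
    """Summarise the next reserved article on demand, e.g. when a curator rejects one."""
    if not queue.reserve:
        return None
    article = queue.reserve.pop(0)  # the reserve keeps ranking order
    item = {**article, "summary": summarise(article)}
    queue.summarised.append(item)
    return item
```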

💡 Up to this point, it is all a ‘script’: no manual step is required apart from pressing a button 🚀

Step 4. Curating: some articles might not be relevant, and LLM output can be hallucinated or mistaken.

I’ve designed an admin curation page 🪄 that helps me scan through the daily articles and fix any mistakes made in the first three steps. It also lets me review the quality of the summaries and the chosen news: I can switch a summary between GPT-4 and Claude 3, or pull in the next available article for a source. Sometimes I even delegate this process to great engineer friends.
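Switching between models implies keeping both outputs per article rather than only the chosen one. A minimal sketch of such a record, assuming a hypothetical `CuratedItem` class and model keys such as `"claude-3-haiku"` and `"gpt-4"`; manual edits are stored as a third variant so the original model outputs stay available for comparison.

```python
from dataclasses import dataclass


@dataclass
class CuratedItem:
    """Hypothetical admin-page record holding every summary variant for one article."""
    title: str
    summaries: dict[str, str]  # e.g. {"claude-3-haiku": "...", "gpt-4": "..."}
    selected: str
    approved: bool = False

    @property
    def summary(self) -> str:
        # The variant that will be published.
        return self.summaries[self.selected]

    def switch_to(self, model: str) -> None:
        if model not in self.summaries:
            raise KeyError(f"no summary from {model}")
        self.selected = model

    def edit(self, text: str) -> None:
        # Manual fix for a hallucinated or mistaken summary, kept alongside the originals.
        self.summaries["manual"] = text
        self.selected = "manual"
```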

Here is a 2-minute video of me explaining the newsletter and daily news curating process:

Step 5. Last but not least: store and display them as beautiful stories 💅🏻

I use my storified component to display the summarised and curated daily tech news as stories, organised by source. Lastly, I double-check that they still look nice!

As the headline gave away, this whole process costs me a daily average of $0.19, even with redundant LLMs. Without the “caching”, ranking and enriching steps, the cost would have rocketed to over 20 times that.
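A sketch of the kind of caching that keeps these costs down: responses are keyed by a SHA-256 hash of model, system prompt and content, so re-running the pipeline on an unchanged article never pays for the same call twice. The `LLMCache` class is hypothetical, since the article does not say how its “caching” is implemented. It stores results in a plain dict by default; a persistent mapping such as one opened with the standard-library `shelve` module (which also uses string keys) would be needed for the cache to survive between daily runs.

```python
import hashlib
import json
from typing import Callable, MutableMapping, Optional

LLMCall = Callable[[str, str, str], str]  # (model, system prompt, content) -> response


class LLMCache:
    """Hypothetical response cache keyed by (model, system prompt, content)."""

    def __init__(self, store: Optional[MutableMapping[str, str]] = None) -> None:
        # Any dict-like mapping with string keys works, e.g. shelve.open("llm-cache").
        self.store = store if store is not None else {}

    @staticmethod
    def key(model: str, system: str, content: str) -> str:
        # JSON-encode the triple so different splits of the same text can't collide.
        raw = json.dumps([model, system, content])
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, system: str, content: str, call: LLMCall) -> str:
        k = self.key(model, system, content)
        if k in self.store:
            return self.store[k]  # cache hit: no API call, no cost
        response = call(model, system, content)
        self.store[k] = response
        return response
```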

Key lessons

Two short and sweet lessons I’ve taken from this:

- Feel the pain of your manual process, be lazy and automate.

- Be mindful of your LLM service usage: cache and rank to avoid high costs.

Do you want to speed up your newsletter curation process?

We can partner up and do the same for your newsletter. Let’s have a chat (15 minutes and no commitment).

https://calendly.com/joaovitor-martins/chat-about-your-newsletter

Subscribe to TechTok!

Subscribe now for the quickest way to stay up to date with tech news. Replace your daily scrolling through TikTok and Instagram stories with finite, refined news from TechTok!